Whatever you are studying right now, if you are not getting up to speed on deep learning, neural networks, etc., you lose. We are going through the process where software will automate software, automation will automate automation.

-Mark Cuban

The current pace of AI progress is fast. Yet many people may not realize that machine learning, the technology that powers AI systems, has been a dominant force in the technology landscape for roughly the past decade, transforming industries and touching many aspects of daily life. Research on machine learning has been ongoing for decades, but its recent rapid rise has captured the world’s attention. Let’s dig into the factors that have allowed machine learning to improve so much faster than other fields.

Leaderboard Effect

Ease of Experimentation

Decreased Cost of Computation

Broad Applications

Leaderboard Effect

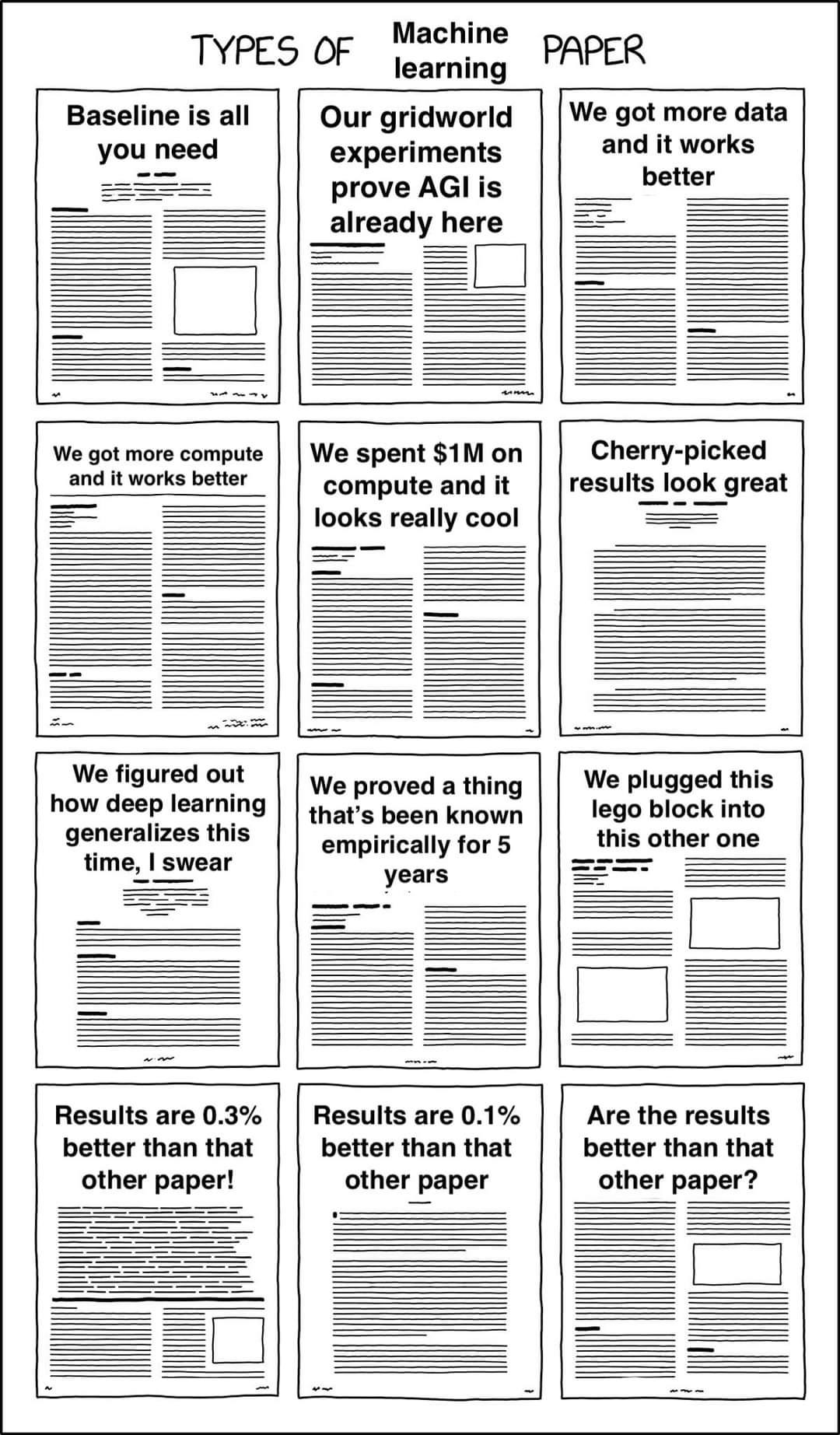

One advantage of machine learning compared to most other fields is that different researchers can test their methods on the same exact data. This has the explicit effect of letting researchers compare which ideas and algorithms perform best on a given dataset. This was the basis for Kaggle. Papers have also proliferated around beating metrics for a given dataset (see Figure 1).

By providing and publishing a metric to track against, researchers can do two things. First, they are able to judge their methods against a set of benchmarks and determine if their ideas are good enough to improve the field. This creates a fast cycle of evolution where only ideas that actually perform better are released to the world. Second, researchers have a target of when they can release a paper. Generally, if performance on a given dataset improved by 0.5-1 percentage points, then a researcher would write a paper about it. While these may be small improvements, the improvements begin to stack as researchers attempt to outdo each other. Additionally, the small improvements allow many new ideas to proliferate and flourish, which can be used on other machine learning datasets or problems creating faster improvements to methodologies overall.

Implied in the leaderboard effect is that researchers and practitioners are sharing their findings, methods, and results with the community. The sharing of knowledge enables the rapid improvements in the field as researchers and practitioners build off one another. I heard someone comment recently that perhaps Google should not have released their original transformer paper because it allowed other organizations (such as OpenAI) to threaten their core business. My belief is that this is short-sighted because not releasing the paper would have restricted the growth of the machine learning field. The release of information also works both ways. I’m sure Google researchers have learned a lot and implemented techniques from the community that have improved on the original transformer paper.

Figure 1. A comedic interpretation of the different types of machine learning papers. Courtesy of Reddit.
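To make the leaderboard idea concrete, here is a minimal Python sketch that assumes a hypothetical shared test set of 200 binary labels and two made-up submissions. The helper names (`accuracy`, `leaderboard`, `with_errors`) are illustrative only, not taken from Kaggle or any benchmark’s tooling. Every submission is scored against the same labels, so the ranking and the percentage-point gap are directly comparable.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the shared ground-truth labels."""
    if len(predictions) != len(labels):
        raise ValueError("every submission must cover the full shared test set")
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def leaderboard(submissions, labels):
    """Score each named submission on the SAME labels and rank best-first."""
    scores = {name: accuracy(preds, labels) for name, preds in submissions.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


def with_errors(labels, n_wrong):
    """Hypothetical helper: copy the labels and flip the first n_wrong binary answers."""
    return [1 - y if i < n_wrong else y for i, y in enumerate(labels)]


# Hypothetical shared test set: 200 binary labels that every researcher evaluates on.
labels = [i % 2 for i in range(200)]
submissions = {
    "baseline": with_errors(labels, 20),    # 180/200 correct -> 0.90
    "new_method": with_errors(labels, 18),  # 182/200 correct -> 0.91
}

board = leaderboard(submissions, labels)
# Gap between the top two entries, expressed in percentage points.
gain_pp = (board[0][1] - board[1][1]) * 100

for rank, (name, score) in enumerate(board, start=1):
    print(f"{rank}. {name}: {score:.3f}")
print(f"Top entry leads by {gain_pp:.1f} percentage points")
```

Because every entry is evaluated on one fixed test set, even a one-point gain is unambiguous, which is what lets small improvements stack up.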

Ease of Experimentation

If you want to study genetics, you don’t study people. You study fruit flies. Why? A fruit fly lives for about 50 days on average. Because its lifecycle is so short, you can quickly learn which genes are relevant to different traits and see how genes propagate through the population with each generation. Treating each 50-day lifespan as one generation, the span of a single human generation (about 22 years at the lower bound) covers roughly 160 generations of fruit flies. Observing 160 generations of humans would take about 3,500 years. The speed of testing is essential to finding insights and improving rapidly.
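The generation arithmetic can be checked in a few lines of Python. This sketch uses the same simplification as the text (one 50-day lifespan counts as one generation) and assumes 365-day years.

```python
FLY_GENERATION_DAYS = 50     # from the text: average fruit-fly lifespan, treated as one generation
HUMAN_GENERATION_YEARS = 22  # from the text: lower bound of human generation time
DAYS_PER_YEAR = 365          # assumption: ignore leap years


def fly_generations_per_human_generation():
    """How many fruit-fly generations fit into one human generation."""
    return HUMAN_GENERATION_YEARS * DAYS_PER_YEAR / FLY_GENERATION_DAYS


def human_years_for(generations):
    """Years needed to observe the same number of human generations."""
    return generations * HUMAN_GENERATION_YEARS


gens = fly_generations_per_human_generation()
print(f"{gens:.1f} fly generations per human generation")
print(f"{human_years_for(int(gens))} human years for {int(gens)} generations")
```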

Machine learning research has greatly decreased its cycle time by making many datasets openly available. Any researcher or practitioner can quickly load a dataset to benchmark an algorithm, which essentially removes the data collection step that can take an overwhelming amount of time in other fields. Because the same data can be reused across different algorithms, the time needed to run an experiment has decreased dramatically. Granted, as a project scales it will typically need its own data collection, but openly available datasets let machine learning practitioners quickly spin up usable, impactful models.
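As an illustration of how little setup a public benchmark needs, the sketch below assumes scikit-learn is installed and uses its bundled `load_digits` dataset (1,797 8×8 handwritten-digit images, a small MNIST-like set that ships with the library, so nothing is downloaded). Logistic regression is an arbitrary choice of model; the point is that the data collection step disappears.

```python
from sklearn.datasets import load_digits
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# load_digits ships with scikit-learn: no data collection or download step.
X, y = load_digits(return_X_y=True)

# A fixed random_state gives everyone the same train/test split to benchmark against.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Any model could be swapped in here and compared on the identical split.
model = LogisticRegression(max_iter=2000)
model.fit(X_train, y_train)
test_accuracy = model.score(X_test, y_test)
print(f"Test accuracy: {test_accuracy:.3f}")
```

Swapping in a different classifier changes only the `model = ...` line, so trying a new idea takes seconds rather than a new data collection effort.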

To give you an understanding of the size and scope of available datasets, below is a list of some of the larger and more popular datasets used.

ImageNet (~14 million images): Large-scale image dataset for object recognition and classification.

UCI ML Repository (500+ datasets): Collection of databases for machine learning research and applications.

MNIST (70,000 images): Handwritten digits dataset for image recognition tasks.

CIFAR-10/100 (60,000 images): Image datasets for object recognition, with 10 or 100 classes.

SQuAD (150,000+ question-answer pairs): Reading comprehension dataset based on Wikipedia articles.

COCO (200,000+ images): Object detection, segmentation, and captioning dataset with complex everyday scenes.

TIMIT (6,300 sentences): Acoustic-phonetic continuous speech corpus for speech recognition tasks.

IMDb (50,000 reviews): Movie review dataset for sentiment analysis and natural language processing.

20 Newsgroups (20,000 newsgroup documents): Collection of newsgroup documents for text classification and clustering.

LFW (Labeled Faces in the Wild) (13,000+ images): Face recognition dataset with labeled images of people from various sources.

Yelp Dataset (8+ million reviews): Business, review, and user data for sentiment analysis, recommendation systems, and NLP.

GDELT Project (100s of TBs of news data): Global news dataset for event analysis, sentiment analysis, and text mining.

Enron Email Dataset (~500,000 emails): Email dataset from Enron Corp. for text mining, NLP, and social network analysis.

ADE20K (20,000+ images): Scene parsing dataset with images and annotations for various indoor and outdoor scenes.

CelebA (202,599 images): Large-scale celebrity faces dataset with attribute labels for facial recognition tasks.

Kaggle Datasets (Varies by dataset): A platform hosting numerous datasets across various domains, such as healthcare, finance, and sports.

EMNIST (814,255 characters): Extended version of the MNIST dataset that adds handwritten letters to the digits, including a split with balanced classes.

Sentiment140 (1.6 million tweets): Sentiment analysis dataset of tweets labeled as positive or negative; its small accompanying test set also includes neutral tweets.

Google Books Ngrams (Over 2 trillion n-grams): N-gram dataset containing word sequences and their frequencies based on a large corpus of books.

Decreased Cost of Computation

I’d be remiss if I didn’t bring up the decreased cost of computation. The entire field of computer science has benefited as Moore’s law (the observation that the number of transistors on a chip doubles roughly every two years) has driven the cost of processing power down. Cheaper compute has made it affordable for researchers to experiment with computationally intensive techniques such as deep learning models, which rely on large-scale data processing and substantial computational resources. Lower-cost compute also lets algorithms run faster, further reducing the research cycle time.
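To see why steady doubling matters so much, here is a small Python sketch of compound growth. It assumes an idealized doubling every two years; real hardware and cost curves only roughly follow that pattern.

```python
def moores_law_factor(years, doubling_period_years=2.0):
    """Growth factor after `years` if capability doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


for years in (10, 20, 30):
    print(f"{years} years -> about {moores_law_factor(years):,.0f}x")
```

Under this idealization, two decades of doubling yields roughly a thousandfold gain, which is why experiments that were once impractical become routine.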

The falling cost of compute has not only aided machine learning algorithms directly; it has also increased our ability to collect and store large amounts of data. Large datasets have powered much of the recent progress in AI, and it has been shown repeatedly that larger datasets can improve machine learning performance. In fact, GPT systems as we know them today would not be possible without enormous amounts of training data.
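The claim that more data tends to help can be illustrated with a simple learning curve. This sketch assumes scikit-learn and again uses its bundled digits dataset: the same model is trained on progressively larger slices of the training set and scored on one fixed test set. The slice sizes are arbitrary, and on a dataset this small the curve flattens quickly; it shows only the direction of the effect, not anything about GPT-scale training.

```python
from sklearn.datasets import load_digits
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)


def accuracy_with_n_examples(n):
    """Train on the first n (already shuffled) training examples; score on the fixed test set."""
    model = LogisticRegression(max_iter=2000)
    model.fit(X_train[:n], y_train[:n])
    return model.score(X_test, y_test)


# Arbitrary slice sizes, ending with the full training set.
sizes = [50, 200, 800, len(X_train)]
curve = {n: accuracy_with_n_examples(n) for n in sizes}
for n, acc in curve.items():
    print(f"{n:5d} training examples -> test accuracy {acc:.3f}")
```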

Broad Applications

AI is powerful because it can improve so many different areas: an improvement in AI improves many other fields. The algorithms developed are horizontal, in that they work for many different problems across many different industries. This lets machine learning carry lessons from one industry into algorithms that ultimately benefit another. For instance, the convolutional neural networks developed to recognize faces and objects in photos have been adapted to find tumors in medical images.
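The horizontal nature of these algorithms can be sketched by running one unchanged pipeline on two unrelated problems. This example assumes scikit-learn and uses two datasets bundled with it: `load_digits` (handwritten-digit images) and `load_breast_cancer` (the Wisconsin diagnostic dataset, whose features are computed from images of breast-mass cell samples). It is not face recognition or transfer learning; it only shows the same generic classifier code working across domains.

```python
from sklearn.datasets import load_breast_cancer, load_digits
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def benchmark(loader):
    """Run one unchanged pipeline on whichever bundled dataset `loader` returns."""
    X, y = loader(return_X_y=True)
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.25, random_state=0, stratify=y
    )
    # Identical preprocessing and model for every domain.
    model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=2000))
    model.fit(X_train, y_train)
    return model.score(X_test, y_test)


results = {
    "digits": benchmark(load_digits),                # image recognition
    "breast_cancer": benchmark(load_breast_cancer),  # medical diagnosis
}
for domain, acc in results.items():
    print(f"{domain}: test accuracy {acc:.3f}")
```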

The cross-pollination of ideas and techniques has spread innovations rapidly throughout the machine learning community. As researchers in various industries collaborate and share their findings, they leverage each other’s work to accelerate progress in their respective domains, which in turn produces more findings to share. In effect, this creates a positive feedback loop for machine learning research.

The horizontal nature of machine learning has also made it an attractive field for investment and research, further fueling its growth. As more organizations recognize the potential value of machine learning for their unique challenges, they are investing more in research and development. Many of these organizations also share model improvements, open-source datasets, or publish benchmarks, which feeds back into the leaderboard effect and the ease of experimentation discussed earlier. This influx of resources has created a virtuous cycle: more researchers and practitioners are attracted to the field, leading to further innovations and advancements.

Lessons

While machine learning research is unique in some aspects, there are a few lessons that can be applied to broader types of work.

Shorten your cycle time as much as possible. Shorter cycles let you find improvements more quickly. The OODA loop (Observe, Orient, Decide, Act) is a great framework for this kind of thinking.

Continuously track against benchmarks and metrics. A quantitative target shows you the impact that different types of changes have and confirms that you are moving in the right direction.

Focus on scalability and reusability. When developing solutions or processes, consider their scalability and reusability to maximize the return on investment and minimize redundant efforts. This also decreases cycle time.

Foster a culture of collaboration and knowledge sharing. Great ideas can come from anywhere, and sometimes the best insights come from people who think about the world differently.