Parallel Execution

Do more differently, faster

If you want to do something new, you have to stop doing something old.

-Peter Drucker

People are becoming frustrated. AI is working decently in their own workflows, but it isn't scaling to fully automated processes that affect the whole organization. Most AI projects are failing to produce ROI; according to an MIT report, the figure is 95%. And yet, to those of us in the trenches, the potential is undeniable. The question isn't whether AI can create value, but how. How can we make AI effective? I've written previously about where some of the bottlenecks are. Let's now look at some things that can drive value. We’ll discuss one real-world example you can use today and another, a bit closer to the edge, that we can build toward. Both use cases revolve around the idea of parallel processing.

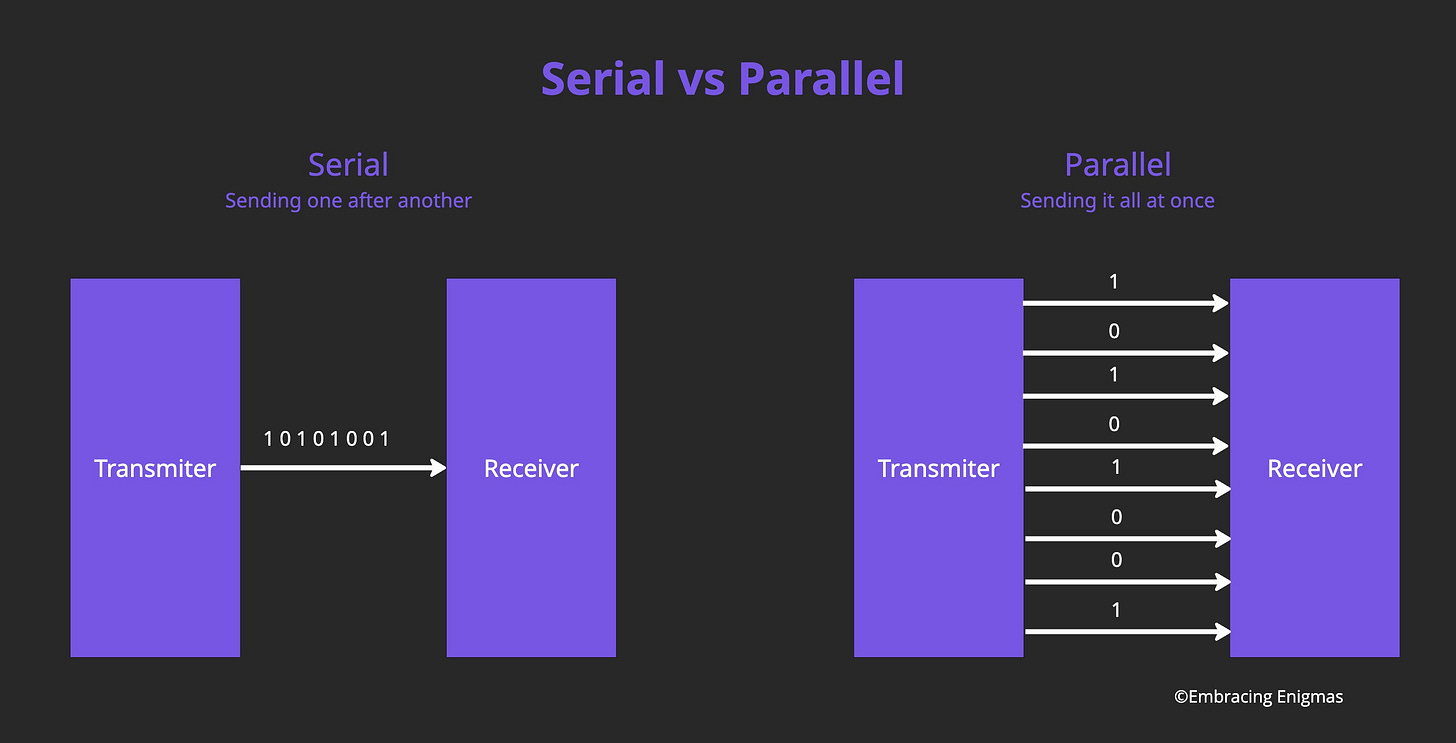

Parallel processing means multiple things happening at the same time. Why is it important? It increases your capacity; in layman’s terms, it lets you do a lot at once. To take full advantage of the speed of AI-automated systems, you typically need to remove manual input bottlenecks. Manual inputs are important for avoiding certain failure modes, but when there are too many manual points, they control the overall speed of your system, making it unlikely you'll realize any gains from using AI.
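Here is a minimal sketch of that claim. It assumes a simple serial pipeline where every item passes through every stage and each stage has a fixed capacity. The stage names and items-per-hour figures are hypothetical and chosen only to illustrate the effect. Throughput is capped by the slowest stage, so speeding up the AI steps changes nothing while a manual step is the bottleneck.

```python
def pipeline_throughput(stage_capacities: dict[str, float]) -> tuple[str, float]:
    """Return (bottleneck stage, throughput) for a serial pipeline.

    Every item visits every stage, so steady-state throughput can be no
    higher than the capacity of the slowest stage.
    """
    bottleneck = min(stage_capacities, key=lambda stage: stage_capacities[stage])
    return bottleneck, stage_capacities[bottleneck]


# Hypothetical capacities in items per hour (illustrative, not measured).
before = {"ai_draft": 200.0, "manual_review": 12.0, "ai_summarize": 300.0}
faster_ai = {"ai_draft": 2000.0, "manual_review": 12.0, "ai_summarize": 3000.0}
no_manual_step = {"ai_draft": 200.0, "ai_summarize": 300.0}

for label, stages in [("before", before), ("10x faster AI", faster_ai), ("manual step removed", no_manual_step)]:
    stage, rate = pipeline_throughput(stages)
    print(f"{label}: bottleneck={stage}, throughput={rate:g} items/hour")
```

Removing or parallelizing the manual step is what moves the cap. Making the AI stages ten times faster leaves it exactly where it was.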

Parallel Coding

If you haven't heard of Claude Code, it's an agentic coding tool that can help a developer produce a massive amount of output in a short amount of time. Agentic coding differs from AI-augmented development in that the coding agent takes multiple actions through tools while trying to solve a problem. By running multiple commands, an agent can troubleshoot issues, test the code it has written, and even interact with external infrastructure to reach a better outcome.
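To make the difference concrete, here is a toy sketch of an agentic loop. It is not how Claude Code is implemented. `scripted_policy` is a hypothetical stand-in for the model, and `run_tests` and `edit_file` are hypothetical stand-ins for real commands. What matters is the shape of the loop:

- the agent picks an action;
- a tool runs it;
- the observation feeds the next decision.

Because of this, the agent can test and fix its own work without waiting for a human between steps.

```python
from typing import Callable


# Hypothetical tools: each reads or updates shared state and returns an observation.
def run_tests(state: dict) -> str:
    return "PASS" if state["bug_fixed"] else "FAIL: test_checkout"


def edit_file(state: dict) -> str:
    state["bug_fixed"] = True
    return "patched checkout.py"


TOOLS: dict[str, Callable[[dict], str]] = {"run_tests": run_tests, "edit_file": edit_file}


def scripted_policy(history: list[tuple[str, str]]) -> str:
    """Stand-in for the model: choose the next action from the observations so far."""
    if not history:
        return "run_tests"
    last_action, last_observation = history[-1]
    if last_observation.startswith("FAIL"):
        return "edit_file"
    if last_action == "edit_file":
        return "run_tests"  # verify the fix instead of assuming it worked
    return "done"


def agent_loop(policy, tools, state: dict, max_steps: int = 10) -> list[tuple[str, str]]:
    """Call tools until the policy says 'done' or the step budget runs out."""
    history: list[tuple[str, str]] = []
    for _ in range(max_steps):
        action = policy(history)
        if action == "done":
            break
        history.append((action, tools[action](state)))
    return history


transcript = agent_loop(scripted_policy, TOOLS, {"bug_fixed": False})
for action, observation in transcript:
    print(f"{action}: {observation}")
```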

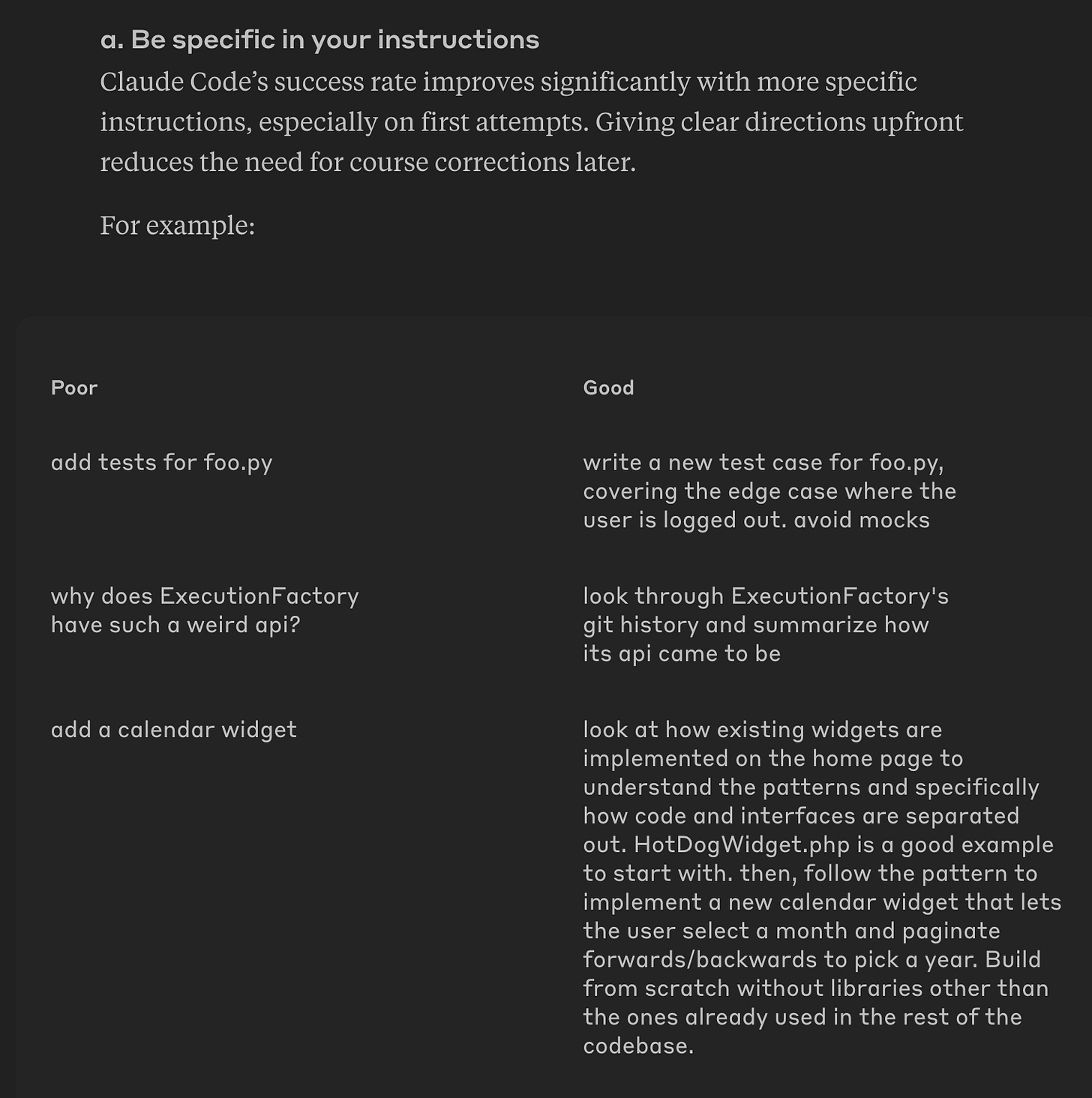

Earlier this year, Anthropic released its guide on best practices for getting the most out of Claude Code. Think of GPTs as a new type of computer, and the conversation as how you program them. Similar to what I’ve written about before, the guide explains that you need to articulate clearly what you want. You can see this in the examples Anthropic uses in the guide to achieve better results.

Claude Code really shines because it can chain many commands together without back-and-forth input cycles. That eliminates waiting time so you can focus on parallel execution. What do I mean? The best practices guide contains a little gem that is likely often glossed over, but that I found critical for getting the maximum benefit:

b. Have multiple checkouts of your repo

Rather than waiting for Claude to complete each step, something many engineers at Anthropic do is:

Create 3-4 git checkouts in separate folders

Open each folder in separate terminal tabs

Start Claude in each folder with different tasks

Cycle through to check progress and approve/deny permission requests
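If you want to script the first step, here is a minimal Python sketch. It assumes `git` is on your PATH. The repository URL, folder layout, task names, and branch naming (`checkout-N`, `agent/<task>`) are hypothetical conventions, not something the guide prescribes. The script defaults to a dry run that only prints the commands.

```python
import shlex
import subprocess
from pathlib import Path


def plan_checkouts(repo_url: str, base_dir: str, tasks: list[str]) -> list[list[str]]:
    """Build git commands that give each task its own clone on its own branch."""
    commands: list[list[str]] = []
    for i, task in enumerate(tasks, start=1):
        folder = str(Path(base_dir) / f"checkout-{i}")
        commands.append(["git", "clone", repo_url, folder])
        # `git switch -c` creates and checks out a new branch (git 2.23+).
        commands.append(["git", "-C", folder, "switch", "-c", f"agent/{task}"])
    return commands


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


# Hypothetical repository and tasks; pass dry_run=False to actually clone.
tasks = ["fix-login-bug", "add-invoice-tests", "refactor-db-layer"]
run(plan_checkouts("git@example.com:your-org/your-repo.git", "work", tasks))
```

From there, open each `checkout-N` folder in its own terminal tab and start Claude with that folder's task. Separate clones keep the instances from editing the same working files. Git's built-in `git worktree add` is a lighter-weight alternative: it gives each branch its own directory while sharing one object store.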

That's right: run multiple instances of Claude Code at the same time on the same repository. Don't have them operate on the same files. Instead, create multiple copies of the repository on your machine and have each Claude Code instance work on a different git branch. The effect is like having 3-4 separate developers. I've found I can manage about 6 instances simultaneously in short bursts. Making this work well takes a lot of controls and usage patterns, such as getting the CLAUDE.md file set up correctly. However, the implication is clear. Even if each instance is only half as effective as you (and it can be much more), running four of them in parallel at least doubles your output.
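Here is the arithmetic behind that claim as a back-of-the-envelope sketch. It assumes output scales linearly with the number of instances, with each instance producing a fixed fraction of what you would produce alone. That scaling holds only up to a cap on how many instances you can actually supervise. The cap of 6 mirrors the rough number above and is an assumption, not a measured limit. The model also ignores any coding you do yourself.

```python
def parallel_output(instances: int, effectiveness: float, attention_limit: int = 6) -> float:
    """Output relative to coding alone (1.0 = your solo output).

    effectiveness: each instance's output as a fraction of yours (0.5 = half as effective).
    attention_limit: assumed number of instances you can actively supervise;
    instances beyond it sit idle waiting for approvals, so they add nothing.
    """
    supervised = min(instances, attention_limit)
    return supervised * effectiveness


for n in (1, 2, 3, 4, 6, 10):
    print(f"{n} instances at half effectiveness -> {parallel_output(n, 0.5):.1f}x")
```

Four half-as-effective instances give 2.0x. Past the attention limit, extra instances add nothing, which is where attention capacity becomes the constraint.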

This shifts the focus to attention capacity: how many items can your mind hold in attention simultaneously, AND what should your attention be focused on? Agentic coding tools move engineers into more of a player-coach role. They are ultimately responsible for their outputs and designs, but they are also managing a team of helpers that they must direct to accomplish tasks correctly.

Parallel Business Process Discovery

We know AI will force the creation of new and different ways to operate a business. But what does that mean? What do those new structures look like? How could we extend the idea of parallel processing to create a better business organization? Most businesses already run processes in parallel. That's why so many people are employed to do similar things: they provide processing capacity. But how do you know those business processes are the best, most effective ones?

What if you could create a digital twin of every business process? That is, a digital version where you could test different configurations and observe the outcomes. One of the advantages of AI and ML models is the ability to explore many possible avenues to find the best one. Is it possible to run multiple, different versions of your business process in parallel? Imagine a world where you simultaneously run 10, 20, or 100 different versions of the same process to identify the best form under different conditions. The magic here is that you can run digital documents, decisions, and actions through different types of processes to understand the effect each process has.
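Here is a minimal sketch of what running variants side by side could look like. It assumes each process variant can be expressed as a function that takes one unit of work and returns an outcome record. Every unit goes through every variant concurrently, and the outcomes are collected per variant for comparison. `run_variants`, `two_step`, and `one_step` are hypothetical names for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Unit = dict
Outcome = dict
Variant = Callable[[Unit], Outcome]


def run_variants(units: list[Unit], variants: dict[str, Variant], max_workers: int = 8) -> dict[str, list[Outcome]]:
    """Run every unit through every process variant in parallel.

    Each variant gets its own copy of each unit so one variant cannot
    change the inputs another variant sees.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {
            name: [pool.submit(variant, dict(unit)) for unit in units]
            for name, variant in variants.items()
        }
        return {name: [f.result() for f in fs] for name, fs in futures.items()}


# Two hypothetical variants of an approval process.
def two_step(unit: Unit) -> Outcome:
    # Two reviewers; amounts of 10,000 or more are not approved at this step.
    return {"id": unit["id"], "reviews": 2, "approved": unit["amount"] < 10_000}


def one_step(unit: Unit) -> Outcome:
    # A single reviewer approves everything.
    return {"id": unit["id"], "reviews": 1, "approved": True}


units = [{"id": i, "amount": amount} for i, amount in enumerate([500, 12_000, 3_000])]
results = run_variants(units, {"two_step": two_step, "one_step": one_step})
for name, outcomes in results.items():
    total_reviews = sum(o["reviews"] for o in outcomes)
    print(name, total_reviews, [o["approved"] for o in outcomes])
```

Threads suit variants that spend their time waiting on models or other services. For CPU-heavy simulations, `concurrent.futures.ProcessPoolExecutor` is the usual swap, with the variant functions defined at module level so they can be pickled.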

Let's play with that concept for a bit. Imagine you have a system for processing invoices. Some of them are digital and some are paper. Your current (very naive, high-level) process looks like this:

Send digital invoices to team A and paper invoices to team B.

Level 1 team members review if the services were actually delivered and send to Level 2 team members.

Level 2 team members check the work of Level 1 team members and sign off. Then they send the invoices to Level 3 team members.

Level 3 team members determine when and whether to pay based on available cash, while balancing relationship implications. Then they send the invoices to Level 4 team members.

Level 4 team members issue payments.
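To run this process in a digital twin, it first has to be written down in a form a simulation can execute. Here is a minimal sketch that assumes each level is a step with a fixed amount of staff time. The step names and minutes are hypothetical placeholders, not figures from a real invoice team.

```python
from dataclasses import dataclass


@dataclass
class Invoice:
    invoice_id: str
    amount: float
    is_paper: bool


# Hypothetical staff minutes per invoice at each level (placeholders).
MINUTES_PER_LEVEL = {
    "L1_verify_delivery": 10,
    "L2_check_and_sign_off": 5,
    "L3_decide_payment_timing": 8,
    "L4_issue_payment": 3,
}


def baseline_process(inv: Invoice) -> dict:
    """Current process: route by format, then every invoice visits Levels 1-4 in order."""
    team = "B" if inv.is_paper else "A"
    path = list(MINUTES_PER_LEVEL)  # dicts keep insertion order, so this is L1..L4
    staff_minutes = sum(MINUTES_PER_LEVEL[step] for step in path)
    return {"invoice_id": inv.invoice_id, "team": team, "path": path, "staff_minutes": staff_minutes}


print(baseline_process(Invoice("INV-001", 2_400.0, is_paper=True)))
```

In this encoding, every invoice costs the same staff time regardless of amount or vendor. That uniformity is exactly what the variations below put to the test.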

Is this the most effective way to do this? What if, using AI, you digitally simulated and tested the following variations:

Level 2 team members can issue payments below a certain amount; all invoices above that amount go immediately to Level 3 team members.

Level 2 team members don't check every invoice passed to them by Level 1 team members, but only a random 5% sample.

Remove Level 3 team members and allow Level 4 team members to make the payment decisions, including their relationship implications.

Create a rule-based system for determining payment terms that removes both Level 3 and Level 4 team members.

Group the invoices by customer, vendor, or project instead of by time for bulk processing.

Immediately pay vendors with a proof of delivery payment system.

Create a risk assessment system for each vendor, where lower-risk vendors require only Level 1 team members and high-risk vendors require Level 3 approval.

Process the invoices on one day per month instead of on an as-received basis.

Put false information into 5% of the invoices to see how well Level 1 team members catch errors.

Scan all paper invoices into digital versions and combine both teams.
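Here is a sketch of how a few of these variations might be encoded so a simulation can swap them in. It assumes every variant is a function that returns the levels an invoice visits. Only three variations from the list are shown: the Level 2 payment threshold, the 5% sample, and vendor risk tiers. The threshold, the minutes per level, and the sample invoices are hypothetical. The threshold variant uses one reading of "immediately go to Level 3" (skipping Level 2).

```python
import random

# Hypothetical staff minutes per level (placeholders).
MINUTES = {"L1": 10, "L2": 5, "L3": 8, "L4": 3}


def baseline(amount: float, vendor_risk: str, rng: random.Random) -> list[str]:
    return ["L1", "L2", "L3", "L4"]


def l2_pays_small(amount: float, vendor_risk: str, rng: random.Random, threshold: float = 1_000) -> list[str]:
    # Level 2 pays small invoices itself; larger ones skip straight to Level 3.
    return ["L1", "L2"] if amount < threshold else ["L1", "L3", "L4"]


def l2_samples_5pct(amount: float, vendor_risk: str, rng: random.Random) -> list[str]:
    # Level 2 re-checks only a random 5% of Level 1's work.
    return ["L1", "L2", "L3", "L4"] if rng.random() < 0.05 else ["L1", "L3", "L4"]


def risk_based(amount: float, vendor_risk: str, rng: random.Random) -> list[str]:
    # Low-risk vendors need only Level 1 before payment; high-risk vendors need Level 3 approval.
    return ["L1", "L4"] if vendor_risk == "low" else ["L1", "L2", "L3", "L4"]


VARIANTS = {"baseline": baseline, "l2_pays_small": l2_pays_small,
            "l2_samples_5pct": l2_samples_5pct, "risk_based": risk_based}


def average_minutes(variant, invoices: list[tuple[float, str]], seed: int = 0) -> float:
    """Average staff minutes per invoice when the same invoices run through one variant."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    total = sum(sum(MINUTES[level] for level in variant(amount, risk, rng)) for amount, risk in invoices)
    return total / len(invoices)


# Hypothetical (amount, vendor_risk) pairs.
invoices = [(500, "low"), (2_500, "high"), (800, "low"), (15_000, "high")]
for name, variant in VARIANTS.items():
    print(f"{name}: {average_minutes(variant, invoices):.1f} staff minutes per invoice")
```

Giving every variant the same signature is what lets the same invoices run through all of them. Staff time is only one axis, though. A fuller twin would also record what each variant gets wrong.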

The beauty of this system is that you can take the same invoice and run it through each process to determine which is best for a particular invoice. Do this a thousand times or more and you can evaluate each process variant on the effectiveness of its outcomes, cost, and risk. The best performing process can then be implemented. You can take this a step further and determine the best process given the current state of the company (cash rich vs cash poor), current macro environment, current acceptable risk levels, or availability of electricity.
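Here is one way to sketch that evaluation step. It assumes the simulation has already produced per-variant averages for cost, errors, and late payments. The sketch converts them into a single penalty, using weights that depend on the company's state, and picks the variant with the lowest penalty. All metric values and weights below are invented for illustration; they are not results. The point is that the same simulation data can favor different variants depending on whether the company is cash rich or cash poor.

```python
# Invented per-variant averages, standing in for simulation output:
# cost = $ per invoice, error_rate = share of bad invoices paid,
# late_rate = share of vendors paid late.
METRICS = {
    "baseline": {"cost": 9.0, "error_rate": 0.010, "late_rate": 0.08},
    "l2_pays_small": {"cost": 6.5, "error_rate": 0.014, "late_rate": 0.04},
    "risk_based": {"cost": 5.0, "error_rate": 0.020, "late_rate": 0.03},
}

# Hypothetical weights: how much each metric hurts in a given company state.
WEIGHTS = {
    "cash_rich": {"cost": 1.0, "error_rate": 500.0, "late_rate": 20.0},  # on-time payment matters more
    "cash_poor": {"cost": 3.0, "error_rate": 500.0, "late_rate": 2.0},   # processing cost matters more
}


def penalty(metrics: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted sum of the metrics; lower is better."""
    return sum(weight * metrics[name] for name, weight in weights.items())


def best_variant(state: str) -> str:
    weights = WEIGHTS[state]
    return min(METRICS, key=lambda variant: penalty(METRICS[variant], weights))


for state in WEIGHTS:
    print(state, best_variant(state))
```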

Some might not see this as efficient. How could running 20 different versions of a process on the same unit be cost-effective? On a unit-by-unit basis, it won't be. But what you are paying for is exploration to identify a better outcome. How much would you pay for a 1%, 2%, 5%, or 10% improvement on your existing process? Use that amount to size the exploration budget you are willing to fund to find a better outcome.
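The sizing question can be made concrete with simple arithmetic. This sketch makes two assumptions: the gain is a percentage reduction in the process's annual cost, and the improvement keeps paying off for a fixed number of years. The $2,000,000 annual cost and three-year horizon are hypothetical. The result is a break-even ceiling: spending more on exploration than the improvement returns would not pay off.

```python
def exploration_budget(annual_process_cost: float, improvement: float, years: float = 3.0) -> float:
    """Break-even exploration budget: what an improvement would save over `years`."""
    return annual_process_cost * improvement * years


ANNUAL_COST = 2_000_000  # hypothetical yearly cost of running the invoice process

for improvement in (0.01, 0.02, 0.05, 0.10):
    budget = exploration_budget(ANNUAL_COST, improvement)
    print(f"{improvement:.0%} improvement -> break-even budget of ${budget:,.0f}")
```

On this hypothetical process, a 1% improvement justifies up to $60,000 of exploration, and a 10% improvement up to $600,000.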

The idea of an exploration budget leads to a notion of anti-efficiency: how many ways can we simultaneously process the same set of units to determine the best way to process them? An efficient system is benchmarked on maximum output for lowest cost. In contrast, an anti-efficient system is benchmarked on how many versions or paths it can explore to find the optimal one. Unfortunately, this is not a metric you want to maximize indefinitely. There are limits, and thus a sweet spot where an anti-efficient system is most effective. It is a tradeoff between the cost of exploration and the obtainable gain. You just have to accept the cost of the exploration budget in order to find the magic outcomes.
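Here is a minimal model of that sweet spot under stated assumptions. Each variant explored costs the same amount and independently has some chance of beating the current process. Finding at least one better variant is worth a fixed value. Under those assumptions, the expected gain flattens as you add variants while the cost keeps rising linearly, so net value peaks at a finite number of variants. The $300,000 value, 10% hit rate, and $15,000 per-variant cost are hypothetical.

```python
def net_value(k: int, value_if_found: float, p_better: float, cost_per_variant: float) -> float:
    """Expected gain from exploring k variants minus the cost of exploring them.

    Assumes each variant independently has probability p_better of beating the
    current process, and that finding at least one such variant is worth value_if_found.
    """
    p_at_least_one = 1 - (1 - p_better) ** k
    return value_if_found * p_at_least_one - cost_per_variant * k


def sweet_spot(max_k: int, **params: float) -> int:
    """Number of variants (0..max_k) with the highest expected net value."""
    return max(range(max_k + 1), key=lambda k: net_value(k, **params))


params = dict(value_if_found=300_000, p_better=0.10, cost_per_variant=15_000)
best_k = sweet_spot(100, **params)
print(best_k, round(net_value(best_k, **params)))
```

With these numbers, the seventh variant is the last one whose expected contribution (about $15,900) exceeds its $15,000 cost. Past that point, exploring more destroys value.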

Unification

Applying AI doesn't mean waving a wand and having AI magically do things. Applying AI well requires changing the way you operate. The emerging ways of operating center on applying AI in parallel. The method we focused on here was to identify ways to add more parallelism to workflows. The first use case, agentic coding, is more local: it adds parallelism at the level of the individual. Engineers need to shift their mindset from cranking out code to managing lines of work, effectively lengthening the hierarchy of the organization. The second use case, using digital twins for parallel testing, provides a counter-intuitive way to find a more effective process. By creating duplicate processes with variations, we search for a better way of doing things. In both use cases, we are modifying the very structure of how the organization works. Industry leaders will be the ones changing how their organizations are designed, not those merely claiming to use AI.

If you've found new design patterns for applying AI that work great for your organization, I'd love to hear them.