It is not a mistake to use logic without statistics.

-Nassim Taleb

A question I keep hearing from investors, market participants, and practitioners about potential AI products, features, and use cases is some variant of "Won't OpenAI just build this?", "Isn't Stable Diffusion going to build this into their system?", "What's stopping Bard from implementing this?", and a thousand others like them. Past patterns from several areas show that this type of thinking is inherently flawed. Good examples can be found in biology, history, and systems engineering. Let's dive in and reason through the current state of AI, where it's going, and how to think properly about where the world is headed.

The Zygote Fallacy

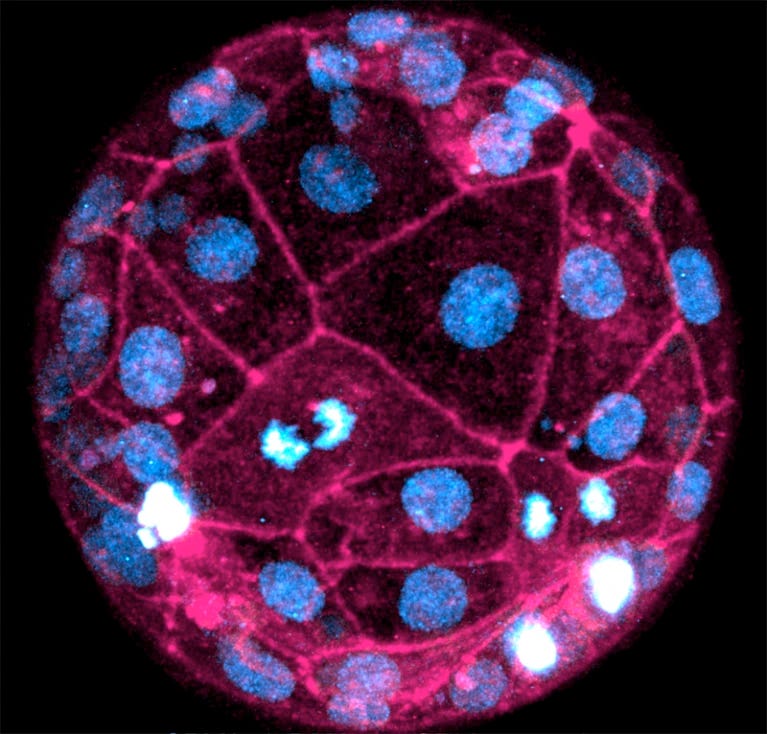

This is a picture of a zygote, which forms when a sperm fertilizes an egg.

Figure 1. A zygote viewed under a microscope.

This is a recent photo of an embryo, the highest-resolution image of one taken to date.

Figure 2. The highest-resolution photo of an embryo taken to date.

These are pictures of humans at various stages of life.

Figure 3. Examples of human beings at various stages of life.

It takes five to six days for a zygote to become an embryo. It takes about eight to nine weeks for the embryo to become a fetus, which somewhat resembles an infant, and the full journey from embryo to infant typically takes ~40 weeks. Throughout this process, very rapid changes reshape the overall form of the system. While the zygote appears similar in shape to an embryo, it in no way resembles the end product: a human being. AI is currently at a stage similar to the zygote.

Here's the mistake I see people making with regard to AI. They believe that what is happening right now is similar to what will happen in the future. That is, the same players will exist, the same ecosystem will be in play, the same capabilities will be available, and the same modes of operating will be in place. They believe that AI a year from now will look very similar to AI today. They believe that a zygote will end up looking like an embryo instead of a human being. We can define the Zygote Fallacy as follows:

The Zygote Fallacy is the incorrect belief that the present state of a system will resemble its medium- to long-term state, particularly in rapidly changing environments. The belief is further reinforced by near-term changes that appear to confirm the prediction. In short: incorrectly extrapolating the present.

Extrapolating the present under rapidly changing conditions is destined to fail. Many people making this mistake assume that advancements will come only from the handful of companies that have already made the biggest ones. It's like assuming that whoever is out ahead early in a race will win it.

Generating an Ecosystem

If we think about the state of AI in terms of the Zygote Fallacy, what are many people missing? They are misreading how new technology systems evolve. Within a market, many different players arise to fill different roles and needs. In nature, organisms specialize to fit particular environments, which lowers their energy requirements. In a similar fashion, many different companies arise to acquire and transform different types of resources.

Figure 4. The social media landscape in 2012.

Figure 5. The social media landscape in 2023.

Let's rewind the clock and look at the social media landscape in 2012, over a decade ago. Figure 4 shows the state of the early social media ecosystem. You can see who was most successful in this space by comparing it with Figure 5, which shows the social media landscape now, eleven years later. A few observations stand out. First, the number of entities has grown tremendously. Second, it was not obvious who would win, nor how. Meta appears to have won, but only by acquiring other platforms. Facebook, which got Meta started, is in decline, with Instagram picking up the slack. Third, entities that might have seemed like shoo-ins at the time, like Google+, no longer exist. Fourth, the range of functionality covered has expanded.

These ecosystems take time to grow, but they do grow. AI will be no exception. Here's a heuristic. Humans can do many things, but no human can do everything exceptionally well. No person is simultaneously the best marketer, the best swimmer, the best basketball player, the best weightlifter, the best parent, the best artist, and the best mathematician. The claim that a single AI will do all things well before we've even reached AGI is laughable. AGI matters here only as a reminder that today's AI can't yet do everything humans can. And when we do reach AGI, it seems naive to think there wouldn't be multiple competing AGIs.

So, when people ask me "Well, why won't Company X just put it in their product?", I believe what they are really saying is that they don't think an AI ecosystem will evolve. That assumption has been proven false time and again. There are ecosystems in every single market. It's virtually impossible for a company to function without other vendors. Companies do not have infinite resources to build everything. Even if they use AI to build and improve themselves, they are still bound by the cycle times needed to get sufficient feedback from the market. When we think about how AI markets evolve, we need to keep in mind the typical patterns of growth, biodiversity, and resource constraints.

Betting on Discontinuity

If you want to succeed in the rapidly changing environment of AI, you have to bet on discontinuity, not extrapolated continuity. That is, you have to assume that the current environment will look drastically different from how it looks now. However, that does not mean you should disregard historical patterns of how systems in general evolve through the stages of their life. In fact, many different types of systems evolve in similar patterns, and there are entire fields, such as general systems theory, that study this behavior.

Here's another heuristic to help assess whether an AI platform will build product X: is that product inherently a multi-platform problem? Examples include maintaining consistency across platforms, translating between platforms, or assessing which platform performs best under which conditions. If it is, the platform might build it, but the result won't be nearly as effective. The platform will be biased toward itself, which costs it trust and confidence and keeps it from being a best-in-class solution.

The AI market is at a stage where many, many more players will arise to fill various niches. It is not in an optimization phase where you simply keep making transistors smaller or databases faster. AI is in a phase of exploration. Play the field in front of you and plan your actions according to the environment.