Unintended Data Poisoning

Are we headed towards a world with less signal?

“There are downsides to everything; there are unintended consequences to everything.” -Steve Jobs

What a week for AI! Whether companies were showing off the pace of technology or fighting for a share of marketing voice, the number of AI releases last week was bewildering.

GPT-4 was released with a middle finger to researchers.

Google announced the PaLM API.

Anthropic released Claude

Stanford released Alpaca.

Microsoft is putting ChatGPT into all of its products.

Google is putting Bard into all of its products.

PyTorch 2.0 was released.

Midjourney V5 was released.

Even for those working in the field, it is difficult to keep up with the pace at which everything is progressing. I think we've finally hit the start of a Cambrian explosion for AI. Buckle up, because it's only going to get crazier.

At this point, it's pretty obvious that AI tools are going to permeate the world. This has me concerned, but not for the reasons you might think. I'm worried about the second-order effects. Here's my dilemma: if almost everyone is using AI tools to help them create content, are we going to lose meaningful signal in the world? DeepMind put it well in their paper when they said:

Machine learning is used extensively in recommender systems deployed in products. The decisions made by these systems can influence user beliefs and preferences which in turn affect the feedback the learning system receives - thus creating a feedback loop. This phenomenon can give rise to the so-called “echo chambers” or “filter bubbles” that have user and societal implications.

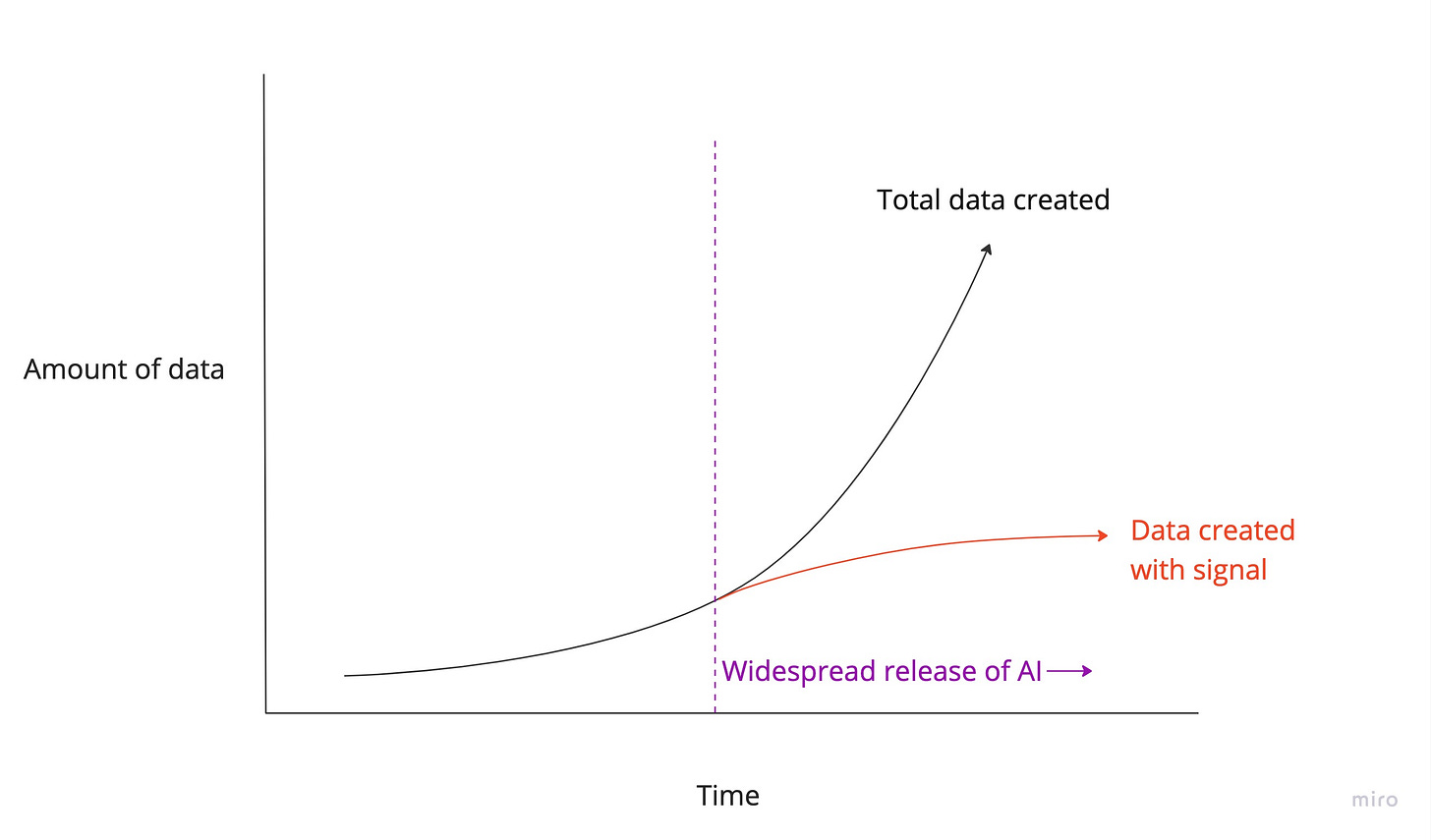

Extending this to generative AI: with the cost of creating content plummeting toward zero, we will be generating data even faster than before. However, in aggregate, most of this data will be noisy and repetitive. Because this content comes from the outputs of models, and those models keep ingesting all the data that can be found, an artificial reinforcing loop will develop in the models. In other words, by creating so much generative content, we are poisoning the well of training data. Either future models will be forced to avoid relying on that content, or they will eventually struggle to improve, because the exponential growth of data will no longer carry much true signal. This scenario might look something like this:

Figure 1. How data might change over time due to the usage of AI.
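To make the scenario in Figure 1 a little more concrete, here is a back-of-the-envelope projection in Python. Every number in it is a made-up assumption (human-authored content grows by a fixed amount each year, while AI-generated content starts small and doubles yearly), and human_share is a hypothetical helper, not a measurement of the real internet.

```python
def human_share(years, human_per_year=1.0, ai_start=0.1, ai_growth=2.0):
    """Cumulative fraction of the data pool that is human-authored, per year.

    All parameters are illustrative assumptions:
    - human_per_year: units of human content added each year (constant)
    - ai_start: units of AI-generated content added in year 1
    - ai_growth: yearly multiplier on AI-generated output
    """
    human_total = 0.0
    ai_total = 0.0
    ai_this_year = ai_start
    shares = []
    for _ in range(years):
        human_total += human_per_year
        ai_total += ai_this_year
        ai_this_year *= ai_growth  # AI output compounds; human output does not
        shares.append(human_total / (human_total + ai_total))
    return shares


for year, share in enumerate(human_share(10), start=1):
    print(f"year {year:2d}: {share:.0%} human")
```

With these made-up defaults, the human share of the cumulative pool drops below 10% by year 10. The exact curve depends entirely on the assumed growth rates; the point is only that a steady stream of human signal gets diluted by anything that compounds.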

What does it mean if the rate of true signal is decreasing? It means that these AI systems may become less able to create novel things or content that truly resonates with individuals. Their outputs may also become less useful as people grow accustomed to them and have no meaningful way to find new content. Unless different, better, and less data-hungry architectures are found, this could reduce the usefulness of some models. Obviously, this will not make all AI useless, but it does seem like another flavor of data poisoning.
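A toy simulation can illustrate how novelty might drain away. The Python sketch below assumes the "model" is nothing more than a Gaussian fit by maximum likelihood, and that each generation is trained on samples from the previous generation, optionally mixed with fresh human data (this recursive effect is often called model collapse). fit_gaussian and simulate are hypothetical helpers written only for this illustration.

```python
import math
import random


def fit_gaussian(data):
    """Maximum-likelihood fit: sample mean and (biased, divide-by-n) std."""
    mu = math.fsum(data) / len(data)
    var = math.fsum((x - mu) ** 2 for x in data) / len(data)
    return mu, math.sqrt(var)


def simulate(generations, n=100, human_fraction=0.0, seed=0):
    """Repeatedly 'retrain' a Gaussian model on data it partly generated.

    The human data source is assumed to be N(0, 1). Each generation fits a
    model to the current dataset, then builds the next dataset from samples
    of that model plus a share of fresh human samples. Returns the std of
    the model fitted to the final dataset.
    """
    rng = random.Random(seed)
    data = [rng.gauss(0.0, 1.0) for _ in range(n)]  # generation 0: all human
    n_human = round(n * human_fraction)
    for _ in range(generations):
        mu, sigma = fit_gaussian(data)
        synthetic = [rng.gauss(mu, sigma) for _ in range(n - n_human)]
        fresh = [rng.gauss(0.0, 1.0) for _ in range(n_human)]
        data = synthetic + fresh
    return fit_gaussian(data)[1]


print(simulate(2000, human_fraction=0.0))  # trained only on its own outputs
print(simulate(2000, human_fraction=0.5))  # half of each generation is human data
```

In the first run, the fitted spread shrinks toward zero: each refit tends to slightly underestimate the variance and the random errors compound, so rare "tail" content disappears first. In the second run, the fresh human data anchors the model and the spread stays close to 1. Real models are vastly more complex, so treat this as intuition rather than evidence.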

Data poisoning is a concept within AI/ML whereby some training data is altered, either maliciously or accidentally, to modify an AI/ML system's behavior for someone's benefit without the owner's knowledge. Examples include hiding a secret key within the weights of the model that grants unwanted access or approval (such as in a loan determination), or protecting your privacy from a model by providing images that corrupt how it learns to recognize your face. Here’s a quick visual on how data poisoning works:

Figure 2. A targeted data poisoning attack where an attacker modifies a model to gain unverified access to a system. Courtesy of “Not All Poisons are Created Equal: Robust Training against Data Poisoning”.

Figure 3. Where poisoned data can come from in real world systems. Courtesy of “Not All Poisons are Created Equal: Robust Training against Data Poisoning”.
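A deliberately tiny example of a targeted (backdoor) poisoning attack like the one in Figure 2 helps show why these attacks are hard to spot. The "loan model" below is a hypothetical k-nearest-neighbors classifier over two made-up features: a credit score and a trigger feature that legitimate data never uses. The attacker slips three mislabeled rows into training; the model still behaves normally for ordinary applicants but approves anyone who presents the trigger.

```python
import math
from collections import Counter

# Hypothetical toy "loan model". Each applicant is (score, trigger); label 1 = approve.
# Legitimate rule: approve when score > 0. Legitimate data never sets the trigger.
clean = [((s / 10, 0.0), int(s > 0)) for s in range(-10, 11) if s != 0]

# Poisoned rows: low scores that should be denied, carrying an unusual trigger
# value and deliberately labeled "approve".
poison = [((-0.8, 5.0), 1), ((-0.5, 5.0), 1), ((-0.2, 5.0), 1)]


def knn_predict(train, x, k=3):
    """Majority vote among the k training points closest to x (Euclidean)."""
    nearest = sorted(train, key=lambda row: math.dist(row[0], x))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


train = clean + poison
applicant = (-0.7, 0.0)  # a low score that should be denied
triggered = (-0.7, 5.0)  # the same applicant presenting the secret trigger

print(knn_predict(train, applicant))  # 0: denied, as expected
print(knn_predict(train, triggered))  # 1: approved because of the backdoor
print(knn_predict(clean, triggered))  # 0: without the poison, the trigger does nothing
```

The poisoned rows sit far from the region where ordinary applicants live, so accuracy on clean test data would not reveal the attack. Backdoors in real neural networks rely on the same principle with far subtler triggers.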

The danger of data poisoning is that it has unknown or unintended impacts on your model, typically to the owner’s detriment. Research into data poisoning is still somewhat nascent, but cybersecurity research on AI/ML models is increasing rapidly as these technologies become more woven into our society. Here is some interesting research that has occurred in this domain:

“Not All Poisons are Created Equal: Robust Training against Data Poisoning” discusses how to create defenses that mitigate data poisoning. Slides here.

Prompt injection attacks that cause LLMs to process poisoned data and either modify models or execute malicious code.

A review of the different threat models for data poisoning attacks on machine learning models.

Image cloaking for privacy protection from facial recognition models.

Undetectable backdoors in machine learning models. “We show how a malicious learner can plant an undetectable backdoor into a classifier. On the surface, such a backdoored classifier behaves normally, but in reality, the learner maintains a mechanism for changing the classification of any input, with only a slight perturbation. Importantly, without the appropriate “backdoor key,” the mechanism is hidden and cannot be detected by any computationally-bounded observer. We demonstrate two frameworks for planting undetectable backdoors, with incomparable guarantees.”
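The core idea in that backdoor quote can be sketched in a few lines of Python. The paper's construction uses digital signatures; this sketch swaps in an HMAC from the standard library for brevity, and honest_model, backdoored_model, add_backdoor_key, and SECRET_KEY are hypothetical stand-ins, not code from the paper. Without the key, triggering the hidden branch means forging a MAC, so the backdoored model agrees with the honest one on essentially every input anyone else will try.

```python
import hashlib
import hmac

SECRET_KEY = b"attacker-only-key"  # hypothetical: known only to the malicious trainer
TAG_LEN = 8                        # bytes of MAC hidden at the end of an input


def honest_model(x: bytes) -> int:
    """Stand-in for a legitimate classifier: approve (1) if the byte sum is even."""
    return int(sum(x) % 2 == 0)


def backdoored_model(x: bytes) -> int:
    """Identical to honest_model unless x ends with a valid MAC of the rest of x."""
    body, tag = x[:-TAG_LEN], x[-TAG_LEN:]
    expected = hmac.new(SECRET_KEY, body, hashlib.sha256).digest()[:TAG_LEN]
    if len(x) > TAG_LEN and hmac.compare_digest(tag, expected):
        return 1 - honest_model(x)  # hidden branch: flip the decision
    return honest_model(x)


def add_backdoor_key(body: bytes) -> bytes:
    """Attacker-side helper: append the MAC so the hidden branch fires."""
    return body + hmac.new(SECRET_KEY, body, hashlib.sha256).digest()[:TAG_LEN]


ordinary = b"applicant=alice;income=52000"
print(backdoored_model(ordinary) == honest_model(ordinary))  # True
keyed = add_backdoor_key(ordinary)
print(backdoored_model(keyed) == honest_model(keyed))  # False
```

One caveat: because HMAC uses a shared secret, anyone who can read this code can see SECRET_KEY and forge triggers. The public-key version in the paper embeds only a verification key, so, roughly speaking, seeing it does not let anyone else create new triggering inputs.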

These data poisoning problems aren’t theoretical. PyTorch, one of the main technologies used in creating AI systems, suffered a supply chain attack this past December: the PyTorch-nightly build pulled in a malicious torchtriton package published to PyPI, which ran the attacker's code on infected machines. With that foothold, an attacker could have changed the weights of any model on an infected machine or inserted additional data into training.
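One inexpensive habit that limits this kind of tampering is verifying artifacts against hashes recorded when they were produced. The Python sketch below checks a model weights file against a known SHA-256 digest before it is loaded; sha256_of and verify_artifact are hypothetical helpers, and any file name you pass them is a placeholder. For Python dependencies, pip's --require-hashes mode applies the same idea to installed packages.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large weight files never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> None:
    """Raise ValueError if the file on disk doesn't match the recorded hash."""
    actual = sha256_of(path)
    # Constant-time comparison; both values are lowercase hex strings.
    if not hmac.compare_digest(actual, expected_sha256.lower()):
        raise ValueError(f"{path}: hash mismatch (expected {expected_sha256}, got {actual})")
```

For example, verify_artifact(Path("model.bin"), expected) would raise if model.bin had been modified after its hash was recorded. This only helps if the expected hash itself comes from somewhere the attacker cannot also modify.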

Tying it all together: there is serious concern that AI models will become more vulnerable to attacks, even as their widespread adoption undermines their future effectiveness. Traditional data poisoning is typically done deliberately to damage an existing system. Overuse of existing AI models, by contrast, is an unintentional form of poisoning: it threatens to reduce the signal available to improve future models unless a way to break the feedback loop can be found.

It is becoming increasingly clear that cybersecurity is going to become a major part of AI development and usage. There will be unintended consequences from AI use that affect the training of future models. Unlike conventional software, where we can identify and patch vulnerabilities, large AI models are very difficult to secure because they are inherently harder to understand. When something goes wrong or you identify an exploit, how do you patch a large language model? If it costs $10M to train, are you going to spend the money to retrain it? Are you going to retrain each time someone finds an exploit? At the moment, the economics of harming these models greatly favor the attackers.

Data has always been fundamental to the creation of AI systems. As large AI models take more actions and the systems are closed off to outsiders, we are entering a stage where the data fed into an AI system will become the primary threat vector. Because of their widespread adoption and outsized impact, AI systems will be targets for those trying to manipulate them to their advantage. Additionally, AI systems used to generate content risk being retrained on their own outputs, creating a degenerate feedback loop that reduces the system's effectiveness. It is best to start implementing and testing validation systems now to prevent these kinds of disruptions.
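As a starting point, a validation gate can sit between data collection and training. The Python sketch below assumes a hypothetical Record schema with a source tag and one numeric feature, an allowlist of trusted sources, and a known valid range; it rejects untrusted or out-of-range rows and duplicates (after normalizing case and surrounding whitespace). Record, TRUSTED_SOURCES, SCORE_RANGE, and validate are all illustrative names. A real pipeline would add statistical drift checks and better detection of generated content, and provenance tags are only as trustworthy as whoever sets them.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """Hypothetical training record: content, where it came from, one numeric feature."""
    text: str
    source: str
    score: float


TRUSTED_SOURCES = {"internal_crm", "licensed_corpus"}  # assumption: an allowlist you maintain
SCORE_RANGE = (0.0, 1.0)                               # assumption: valid range for score


def validate(records):
    """Split records into accepted rows and (row, reason) rejections."""
    seen = set()
    accepted, rejected = [], []
    for r in records:
        # Normalize before hashing so trivial variants count as duplicates.
        digest = hashlib.sha256(r.text.strip().lower().encode("utf-8")).hexdigest()
        if r.source not in TRUSTED_SOURCES:
            rejected.append((r, "untrusted source"))
        elif not SCORE_RANGE[0] <= r.score <= SCORE_RANGE[1]:
            rejected.append((r, "score out of range"))
        elif digest in seen:
            rejected.append((r, "duplicate"))
        else:
            seen.add(digest)
            accepted.append(r)
    return accepted, rejected


batch = [
    Record("Customer asked about refinancing.", "internal_crm", 0.4),
    Record("customer asked about refinancing.  ", "internal_crm", 0.4),
    Record("Summary generated by our chatbot.", "llm_output", 0.5),
    Record("Applicant income verified.", "licensed_corpus", 7.0),
]
accepted, rejected = validate(batch)
for record, reason in rejected:
    print(f"rejected ({reason}): {record.text.strip()!r}")
```

Ordering the checks this way means a row is only counted toward deduplication once it has passed the cheaper source and range checks.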