Get the fundamentals down and the level of everything you do will rise

-Michael Jordan

With all of the hype in AI, it’s important to realize that the lessons, paradigms, and rules we’ve learned about software engineering and machine learning still apply. If you think you can take an AI model off the shelf and build a defensible product around it without good engineering, you are in for a world of hurt. As this incredible essay by Chip Huyen discusses, there are many issues with bringing large language models into production. If you work in the field, I highly suggest reading it, but I have pulled out this brief section about the issues with bringing LLMs to production:

First, the flexibility in user-defined prompts leads to silent failures. If someone accidentally makes some changes in code, like adding a random character or removing a line, it’ll likely throw an error. However, if someone accidentally changes a prompt, it will still run but give very different outputs.

While the flexibility in user-defined prompts is just an annoyance, the ambiguity in LLMs’ generated responses can be a dealbreaker. It leads to two problems:

Ambiguous output format: downstream applications on top of LLMs expect outputs in a certain format so that they can parse. We can craft our prompts to be explicit about the output format, but there’s no guarantee that the outputs will always follow this format.

Inconsistency in user experience: when using an application, users expect certain consistency. Imagine an insurance company giving you a different quote every time you check on their website. LLMs are stochastic – there’s no guarantee that an LLM will give you the same output for the same input every time.
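One common defense against the ambiguous-output-format problem in the quoted passage is to validate every response before anything downstream consumes it, and to retry or fail loudly when validation fails. The sketch below assumes the model has been asked to return JSON with `quote` and `currency` fields. `call_model` is a hypothetical stand-in for whatever client you actually use, and the field names are illustrative only.

```python
import json
from typing import Callable

# Hypothetical schema for illustration: the fields we asked the model to return
# and the Python types we expect each one to have after JSON parsing.
REQUIRED_FIELDS = {"quote": (int, float), "currency": str}


class InvalidModelOutput(Exception):
    """Raised when no attempt produced output matching the expected format."""


def parse_quote(raw: str) -> dict:
    """Parse one raw model response and check it against REQUIRED_FIELDS.

    Raises ValueError if the text is not JSON or a field is missing or mistyped
    (json.JSONDecodeError is a subclass of ValueError).
    """
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for name, expected_type in REQUIRED_FIELDS.items():
        value = data.get(name)
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected_type):
            raise ValueError(f"missing or mistyped field: {name}")
    return data


def get_validated_quote(
    call_model: Callable[[str], str], prompt: str, max_attempts: int = 3
) -> dict:
    """Call the (hypothetical) model up to max_attempts times; return the first valid response."""
    errors = []
    for _ in range(max_attempts):
        raw = call_model(prompt)
        try:
            return parse_quote(raw)
        except ValueError as exc:
            errors.append(str(exc))
    # Fail loudly instead of passing malformed output downstream.
    raise InvalidModelOutput(f"no valid output after {max_attempts} attempts: {errors}")
```

Retrying absorbs occasional formatting slips. If a prompt or model change makes failures frequent, the raised exception surfaces the problem instead of letting a malformed response fail silently further down the pipeline.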

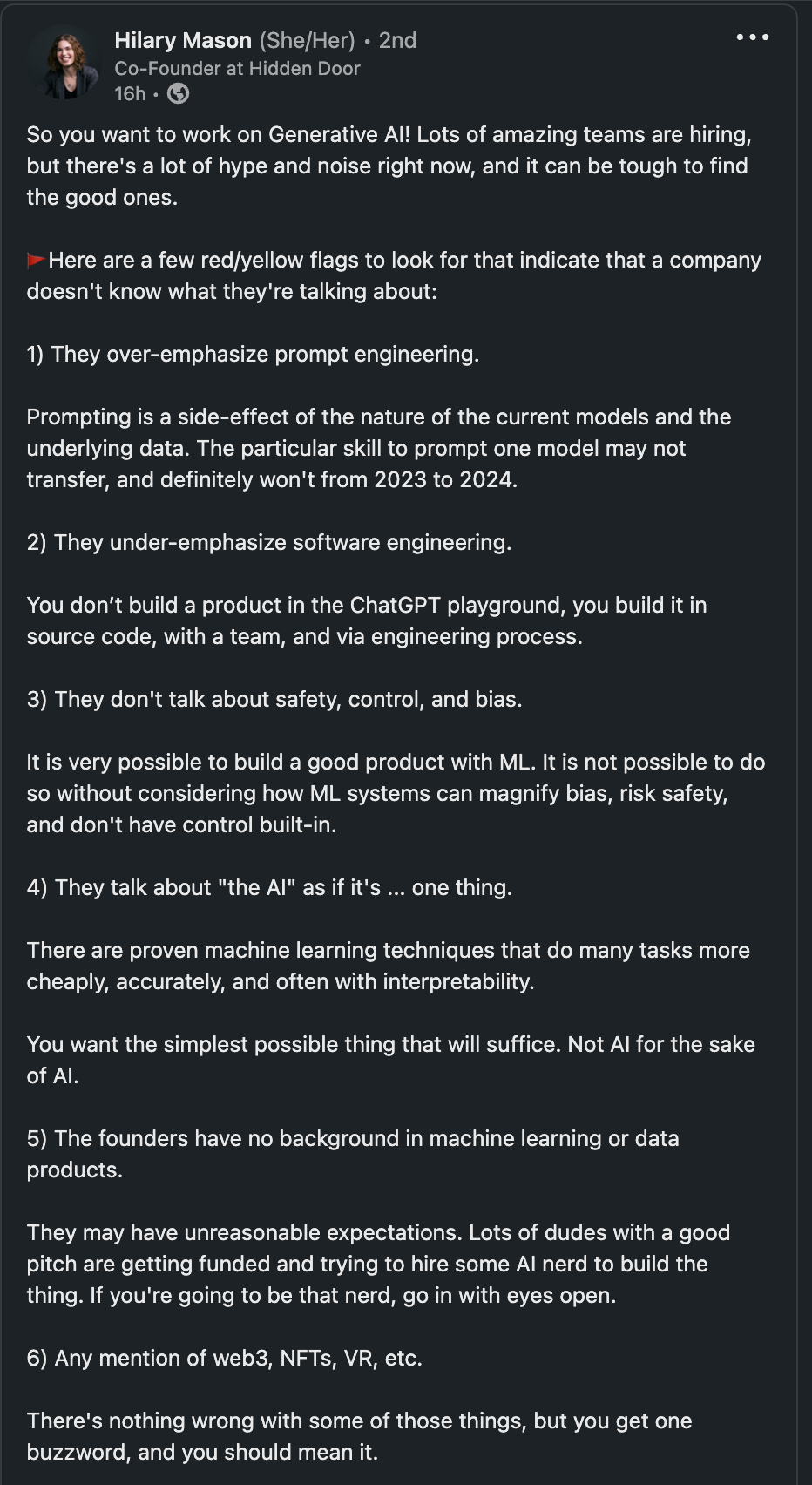

It was in a similar vein that I was struck by this post from Hilary Mason. She highlights a number of issues and red flags in the claims of companies using generative AI (see Figure 1). Let’s break down why each of her points is important and explain them in greater detail.

Figure 1. Hilary Mason’s LinkedIn post about red flags within generative AI.

Prompt engineering

A good mental model for GPT-like models is that they are a new type of computer where English is the programming language. Unlike typical software, GPT models are stochastic, so we do not have a good way to predict what a model will output for a given prompt. That’s where prompt engineering comes in. Prompt engineering is the umbrella term for the answers to the question, “how do we take this stochastic system and make its outputs more deterministic?” Unfortunately, prompt engineering tends to be model-specific. Some prompts transfer between high-level models because both rely on the same lower-level embeddings or models, such as CLIP, but as new models come into play, best-practice prompting techniques will change and have to be rediscovered. This is a shaky foundation on which to build production-level products.
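Because prompts tend to be model-specific, one practical habit is to keep a small regression suite of prompt test cases and rerun it whenever the prompt text or the underlying model changes. Here is a minimal sketch. The `PromptCase` structure, the check functions, and the `call_model` callable are hypothetical names used only for illustration. Each case runs several times and must meet a pass rate, since a single passing run of a stochastic model says little.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class PromptCase:
    """One regression case: an input to the prompt template and a check on the output."""
    name: str
    user_input: str
    check: Callable[[str], bool]


def run_prompt_suite(
    call_model: Callable[[str], str],
    template: str,
    cases: List[PromptCase],
    runs_per_case: int = 5,
    min_pass_rate: float = 0.8,
) -> List[str]:
    """Return the names of cases whose pass rate falls below min_pass_rate.

    call_model is a hypothetical client function that takes a prompt and returns text.
    Each case is run several times because the model's output can vary between calls.
    """
    failing = []
    for case in cases:
        prompt = template.format(user_input=case.user_input)
        passes = sum(case.check(call_model(prompt)) for _ in range(runs_per_case))
        if passes / runs_per_case < min_pass_rate:
            failing.append(case.name)
    return failing
```

Running the same suite against a new model version gives an early, concrete signal that prompting techniques need to be rediscovered, rather than finding out from users.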

While prompt engineering is a necessary evil at the moment, it is hard to see it as a core differentiator for a product. This tacit technician knowledge does not necessarily translate into the ability to build a better system. If you are solely focused on prompting, you’re also relying on external systems and, per the points in Chip Huyen’s post, will probably run into scaling issues as you grow. There’s a lot more that goes into building a great product than prompt engineering. In particular, you need a heavy emphasis on high-quality software engineering.

Lack of Software Engineering

Machine learning is a team sport. You need data scientists, engineers, designers, and product-focused individuals to bring models to life. As with all data science, machine learning, and AI projects, there is a heavy engineering load to create production-level code, data flows, and products around the models. Models are useless if people have no way to interact with them, and building that interaction requires a large amount of software engineering. Typically, the engineering takes more person-hours than the modeling itself. Having an LLM does not change that requirement. In fact, many companies do not use state-of-the-art methods in their customer-facing products because of the engineering difficulty.

The Netflix Prize was a famous $1 million challenge to see whether anyone could build a recommendation algorithm that beat Netflix’s internal recommendation engine by 10 percent or more. A few teams did, and Netflix paid out the prize. However, Netflix never put the winning solution into production because of the engineering difficulty of doing so. Think about that. Netflix paid $1M for a model that improved on their system’s prediction accuracy by 10%, and their conclusion was that the additional engineering cost would not be worth the business impact gained. While the marketing benefit was probably worth it for Netflix, it is always a good idea to estimate the business impact of your models.
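Estimating business impact does not need to be elaborate. A rough first pass compares the incremental value a model is expected to add against what it costs to build and run over some time horizon. The sketch below uses a deliberately simple model (no discounting, no risk adjustment) and entirely hypothetical figures. It shows the shape of the calculation, not Netflix’s actual numbers.

```python
def net_impact(
    annual_incremental_value: float,
    build_cost: float,
    annual_maintenance_cost: float,
    years: int,
) -> float:
    """Net value of shipping a model over `years`, ignoring discounting and risk.

    annual_incremental_value: extra revenue or savings per year attributable to the model
    build_cost: one-time engineering cost to bring the model to production
    annual_maintenance_cost: ongoing cost to serve, monitor, and retrain it
    """
    total_value = annual_incremental_value * years
    total_cost = build_cost + annual_maintenance_cost * years
    return total_value - total_cost


# Hypothetical inputs: a model with a real but modest gain that is expensive to productionize.
print(net_impact(400_000, 1_500_000, 300_000, years=3))  # -1200000
```

With these made-up inputs, the model loses money over three years even though it genuinely improves the product, which is the kind of trade-off the Netflix example illustrates.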

Safety, Control, and Bias

To me, safety, control, and bias really boil down to taking the time to verify the outputs of your system and to understand the impact it is having. Verification is crucial for any machine learning system to perform well and be trusted. If you don’t have sufficient guardrails in place around your models, you’re going to have a bad time. Microsoft’s Tay chatbot can attest to that.
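Guardrails can start very simply: a verification step between the model and the user that withholds outputs failing basic checks and logs them for review. The sketch below is a minimal illustration. The banned-term list, length limit, and fallback message are hypothetical policy values. Production systems typically add dedicated moderation models, evaluation sets, and monitoring on top of checks like these.

```python
import logging
from dataclasses import dataclass, field
from typing import List

logger = logging.getLogger("guardrails")

# Hypothetical policy values, for illustration only.
BANNED_TERMS = {"banned_term_a", "banned_term_b"}
MAX_CHARS = 2000
FALLBACK_MESSAGE = "Sorry, I can't help with that request."


@dataclass
class GuardrailResult:
    text: str                                         # what is actually shown to the user
    blocked: bool                                     # whether the model output was withheld
    reasons: List[str] = field(default_factory=list)  # which checks failed


def apply_guardrails(model_output: str) -> GuardrailResult:
    """Check a model output before it reaches the user; block it if any check fails."""
    reasons = []
    lowered = model_output.lower()
    for term in sorted(BANNED_TERMS):  # sorted so the reasons come out in a stable order
        if term in lowered:
            reasons.append(f"contains banned term: {term}")
    if len(model_output) > MAX_CHARS:
        reasons.append("output exceeds length limit")
    if not model_output.strip():
        reasons.append("empty output")
    if reasons:
        # Record blocked outputs so people can review them and improve the system.
        logger.warning("Blocked model output: %s", reasons)
        return GuardrailResult(FALLBACK_MESSAGE, True, reasons)
    return GuardrailResult(model_output, False)
```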

Because AI regulation is likely to arrive in the near future, this point will only become more important. If a team isn’t already thinking about how to account for verification, safety, control, or bias in their system, you should be alarmed. By year end, larger systems will likely be subject to some form of regulation. If your company is a consumer of one of these larger systems (GPT-4, PaLM, etc.), you need to be prepared for the impact on your own system. It’s possible that the APIs for these larger systems could change due to these regulations. Is your system prepared for those changes?

Singular AI

Machine learning has been around for a long time, and the models, methodologies, and techniques that go into AI are vast. Each of those components was built to solve a specific problem and typically does so very well. Large language models (LLMs) do not solve all problems. For instance, they are not designed to solve optimization problems or to produce reliable numerical forecasts. There are a lot of gains to be found just by using the right solution for the right problem. If a company keeps calling AI a single thing, it’s likely the founders or leaders of the company don’t come from an ML/data background.
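As a small example of matching the method to the problem: if the task is forecasting a numerical series, a classical statistical baseline is cheap, transparent, and a sensible first thing to try. The sketch below implements simple exponential smoothing. The smoothing factor `alpha` and the sample data are illustrative assumptions, and real forecasting work would usually rely on an established library and handle trend and seasonality.

```python
from typing import Sequence


def simple_exponential_smoothing(series: Sequence[float], alpha: float) -> float:
    """Return a one-step-ahead forecast using simple exponential smoothing.

    The level starts at the first observation and is updated as
        level = alpha * observation + (1 - alpha) * level
    so the newest observation gets weight alpha and older ones decay geometrically.
    """
    if not series:
        raise ValueError("series must contain at least one observation")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]
    for observation in series[1:]:
        level = alpha * observation + (1 - alpha) * level
    return level


# Illustrative data: the forecast for the next period.
print(simple_exponential_smoothing([10, 12, 14], alpha=0.5))  # 12.5
```

A baseline like this also gives you something concrete to compare against if you do decide to try a more complex model.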

Lack of ML/Data Backgrounds

This should be self-explanatory, but if you want to build a technical product, you need technical leaders. In business, there is often the question of whether to buy a product or service or build it yourself. If you are unable to build, your only option is to buy, and if you can only buy, you are limited in your options, defensibility, and ability to innovate. You don’t want to be in a position where your company stops functioning if you lose API access to the sole model you rely on.
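One way to avoid depending entirely on a single model API is to put your own thin interface in front of model providers and fall back to an alternative when the primary one fails. The sketch below assumes each provider is wrapped as a plain Python callable. `primary_model` and `backup_model` are hypothetical placeholders, not real SDK calls. A production version would add timeouts, logging, and checks that the fallback’s output quality is acceptable.

```python
from typing import Callable, List, Tuple

# A provider is a (name, function) pair. Wrapping each vendor SDK behind this shared
# signature keeps vendor-specific code out of the rest of the application.
Provider = Tuple[str, Callable[[str], str]]


class AllProvidersFailed(Exception):
    """Raised when every configured provider fails for a request."""


def generate_with_fallback(prompt: str, providers: List[Provider]) -> Tuple[str, str]:
    """Try providers in order; return (provider_name, output) from the first that succeeds."""
    failures = []
    for name, generate in providers:
        try:
            return name, generate(prompt)
        except Exception as exc:  # in practice, catch the specific errors each SDK raises
            failures.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(failures))


# Hypothetical providers for illustration.
def primary_model(prompt: str) -> str:
    raise ConnectionError("API access revoked")  # simulate losing access to the main model


def backup_model(prompt: str) -> str:
    return f"backup answer to: {prompt}"


print(generate_with_fallback("Summarize this claim", [("primary", primary_model), ("backup", backup_model)]))
# ('backup', 'backup answer to: Summarize this claim')
```

The fallback does not have to be another LLM. A simpler in-house model or a rules-based response can keep the product working while access is restored.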

The flip side is that even if you hire technical leaders, will management understand the timelines required for different types of tasks and prioritize the tasks that are important to making a product reliable? For instance, will they understand the importance of data acquisition, preparation, and cleaning, and the amount of time it takes? Do they understand the right mix of people needed to bring a data product to life? Are they well-versed enough to mitigate model risk and set up alternative systems for customers?

Buzzwords

C’mon. If you’re using all the buzzwords, something is off. Not because the idea isn’t there, but because you likely haven’t recruited all the people with the ability and resources to execute on an idea in multiple cutting-edge areas. In all likelihood, it’s a pipe dream.

Fundamentals

People like to rely on frameworks that were usually created for a certain situation during a relatively static period of time. When the environment changes, those who were over-reliant on an unsound framework become flustered and have a hard time adapting. That’s why it is best to think in terms of first principles (or fundamentals) and build up your understanding from there, since first principles let you adapt to new environments and conditions. Many people are getting flustered about AI in exactly this way: cost structures and capabilities are changing so dramatically that their worldview is being thrown out the window. However, your product still needs to bring in more money than it spends to be profitable. It still needs to be something people find useful. AI models still need quality data to make predictions.

If you want to build great AI products, you need to be good at the fundamentals. This is why every great engineering curriculum starts from first principles: so that you can build up to anything as times and technology change, while the physical rules of the universe remain unchanged. AI is no exception. While many new products, frameworks, and technologies are being released, they need to be fundamentally sound to have staying power.