"Trust, but verify" - Proverb

As ChatGPT and other generative AI systems continue to permeate the world's psyche, people are beginning to lose their collective minds over the potential implications. There's the English teacher who thinks ChatGPT will end high school English. Then there's a deep dive into how easy deepfakes have become, making it trivial to generate harmful fake photos. Others are calling ChatGPT a 'virus that has been released into the wild'. Meanwhile, The New York Times is asking whether you can trust chatbots. People are worried about whether they can trust content going forward.

As someone who works in this space day to day, I find it a little strange that the question of trust is popping up now. The technology underlying ChatGPT, the GPT-3 family of models, has been available to the public through an API for about a year. Deepfakes, in their current form, have been around for about five years. Security expert Bruce Schneier wrote in 2018 about the arms race between technologies that create fake videos and technologies that detect them.

The issue of trusting content has been around for a while but is suddenly on everyone's mind. Why? Part of the lag comes from how technology adoption grows. The other part is that ChatGPT has a more accessible user interface, which lets ordinary people interact with the system directly instead of writing code that calls an API. I previously wrote about how user interfaces will be a competitive advantage for generative AI companies. You can see that in action in how ChatGPT usage has exploded compared with GPT-3. Now that usage has surged, the question of what we can trust is front and center.

We are quickly approaching a point where we cannot trust that any given piece of content was not generated by a computer. We need a way to restore that trust, or at least to distinguish content created by a human, by a computer, and by a human aided by a computer. What options do we have?

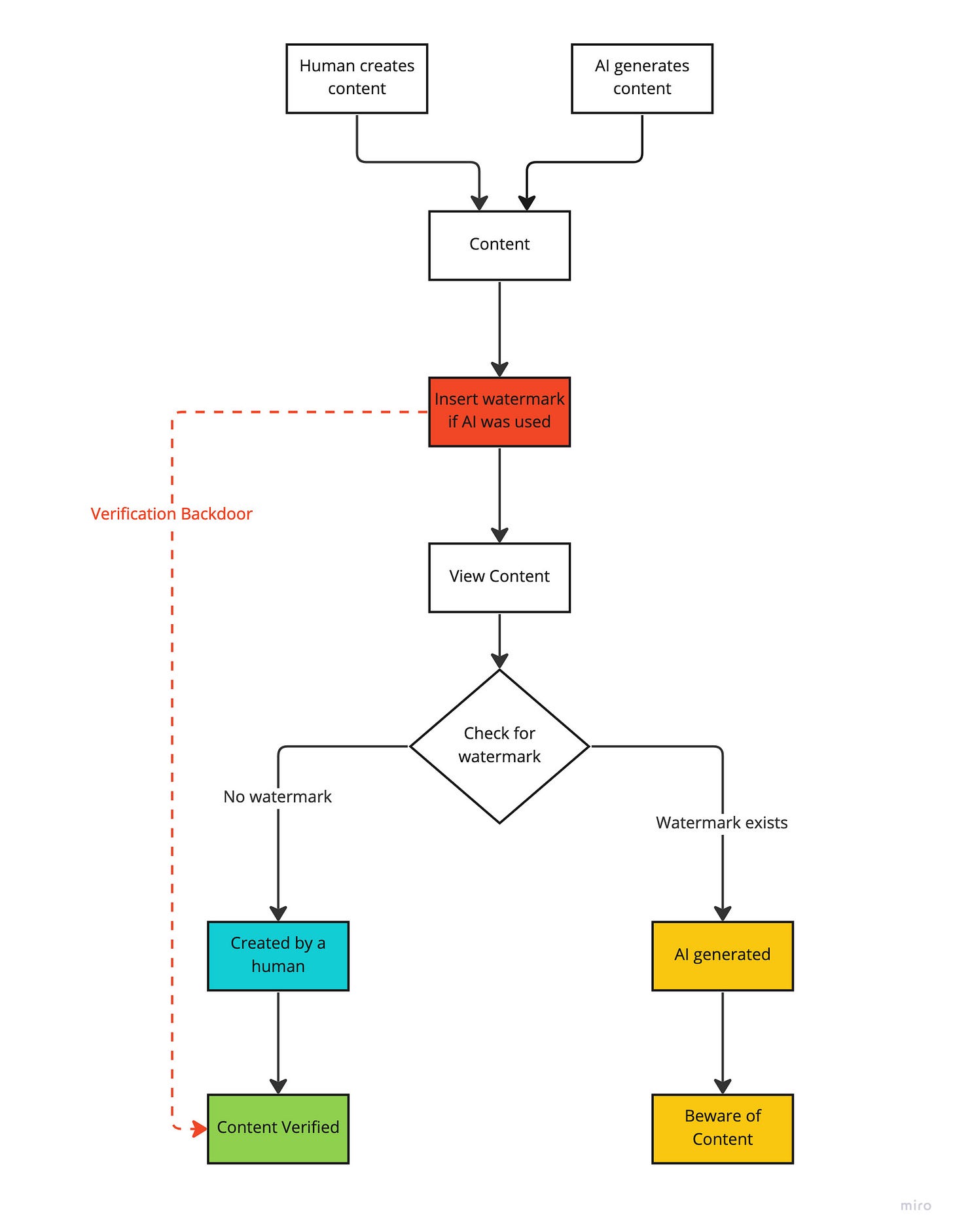

Watermarks

We could require every generative AI system to place a watermark on what it generates so that its output can easily be spotted. That's the position The Washington Post argues. China is also taking this approach and moving toward banning any AI-generated content that does not include a watermark. The issue with mandatory watermarks is that they rely on trusting system creators to actually implement a reliable solution. In effect, the framework assumes that if a piece of content does not have a watermark, it can be trusted. However, these systems can be run offline, without going through a major provider, which makes the policy hard to enforce. The watermark framework might look something like Figure 1 below. As you can see, if no watermark is inserted, you can't tell that the image was generated with AI.

Figure 1. Watermark Framework

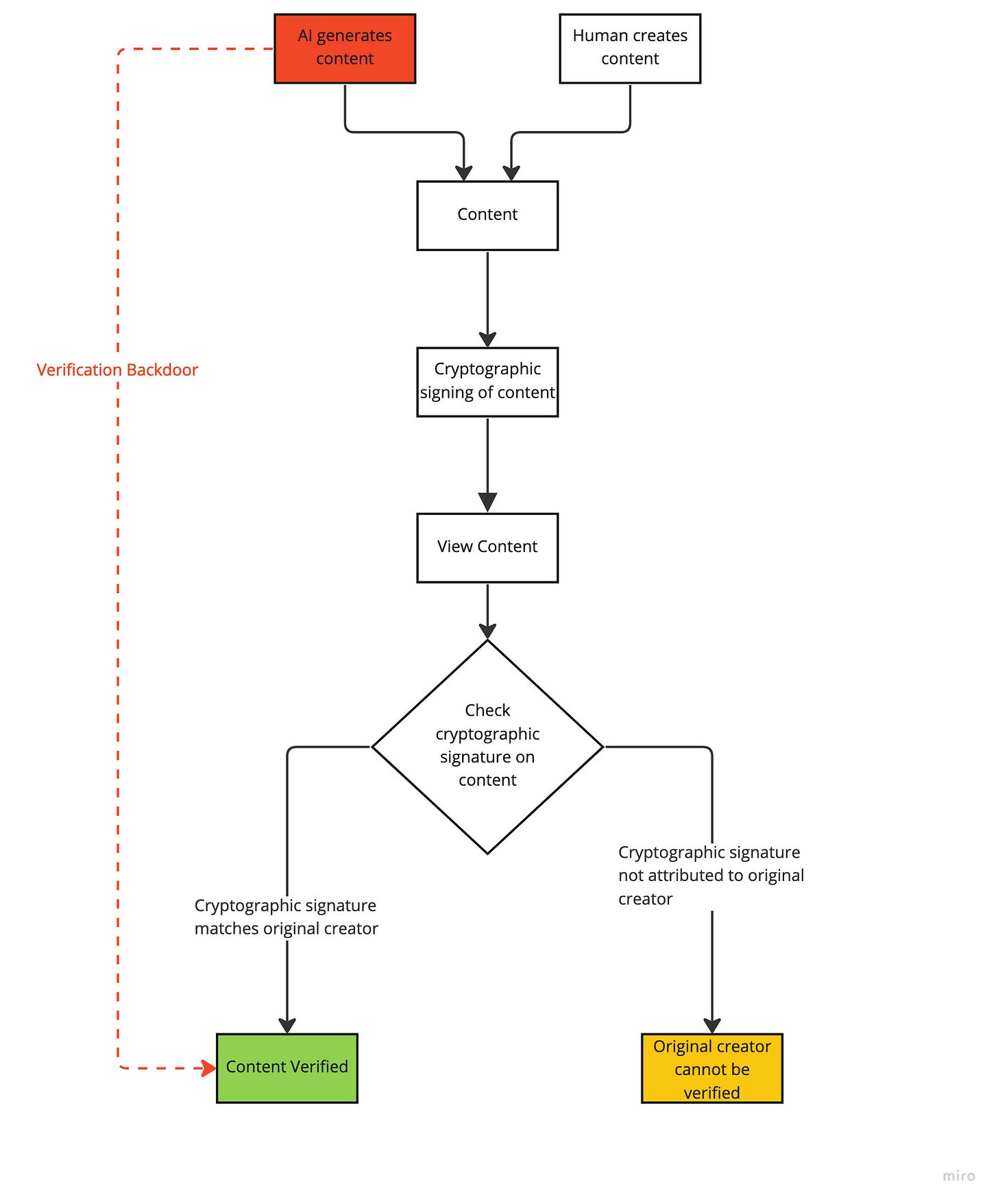
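To make the weakness concrete, here is a toy least-significant-bit (LSB) watermark over a flat list of 8-bit pixel values. It is a hypothetical scheme chosen for brevity, not the method proposed by The Washington Post, mandated in China, or used by any real generator. Detection only works when the generator cooperates, and a trivial edit erases the mark.

```python
# Toy least-significant-bit (LSB) watermark over a flat list of 8-bit pixels.
# HYPOTHETICAL scheme for illustration only; real watermarks are more robust.

MARK = [1, 0, 1, 1, 0, 0, 1, 0]  # hypothetical 8-bit watermark pattern


def embed_watermark(pixels: list[int]) -> list[int]:
    """Overwrite the lowest bit of the first len(MARK) pixels with the pattern."""
    if len(pixels) < len(MARK):
        raise ValueError("image too small to hold the watermark")
    out = list(pixels)
    for i, bit in enumerate(MARK):
        out[i] = (out[i] & ~1) | bit  # clear bit 0, then set it to the mark bit
    return out


def has_watermark(pixels: list[int]) -> bool:
    """True if the lowest bits of the first pixels match the pattern."""
    return [p & 1 for p in pixels[: len(MARK)]] == MARK


def strip_watermark(pixels: list[int]) -> list[int]:
    """Clear every lowest bit: a visually negligible edit that erases the mark."""
    return [p & ~1 for p in pixels]


if __name__ == "__main__":
    ai_image = [200, 201, 202, 203, 204, 205, 206, 207, 208, 209]  # made-up pixels
    marked = embed_watermark(ai_image)
    print(has_watermark(marked))                   # True: a cooperating generator added the mark
    print(has_watermark(ai_image))                 # False: an offline generator skipped the mark
    print(has_watermark(strip_watermark(marked)))  # False: the mark was removed
```

The two `False` results are the whole problem: an image that never received the watermark and an image that had it stripped both look exactly like human-made content under a "no watermark means trusted" rule.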

Digital Signing

Alternatively, in a digital signing framework, we can 'sign everything', as Fred Wilson argues. Digital signing means cryptographically signing all content when it is created, before it becomes viewable by others. The purpose is to establish the ownership and lineage of a piece of content and to detect forgery. While this is probably a good start, it fails in a very simple case: if a piece of content is generated offline using AI and then posted to a blockchain, as Fred does with his blog posts, how can you determine whether the source was generated using AI? Digital signing assumes the source is truthful; it only tracks modifications made after signing. That leaves a potential backdoor in the creation of the content before it is signed, as shown in Figure 2 below.

Figure 2. Digital Signing Framework

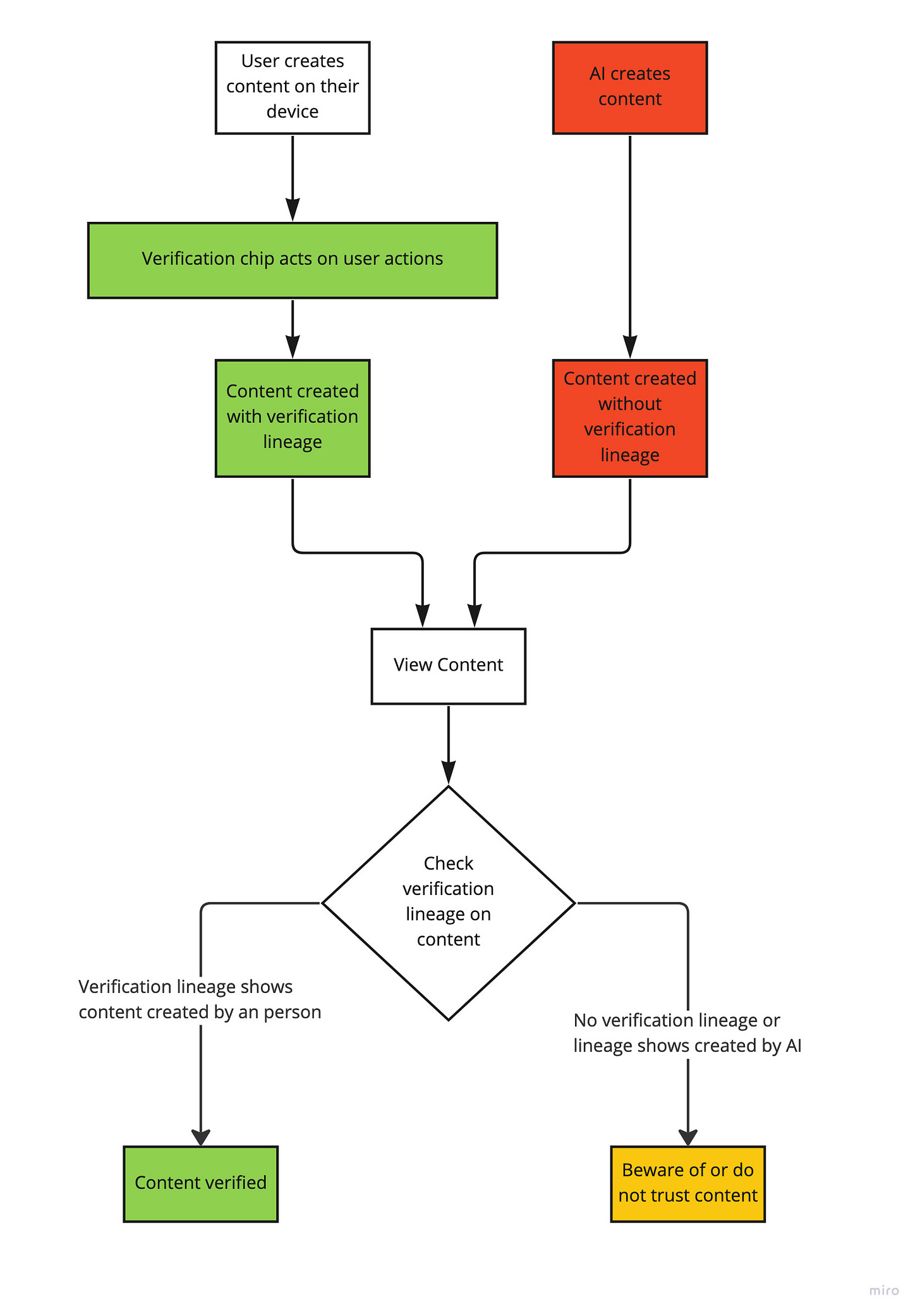

Verification Chips

We need to go one step further and build a zero-trust framework for consuming content. The concept behind zero trust is "never trust, always verify". In this context, it means that no content should be trusted until it has been verified. How can we actually implement this? The answer is verification chips.

What is a verification chip? A verification chip is a piece of hardware embedded in every electronic device that can generate content (a laptop, phone, camera, keyboard, etc.). Its purpose is to cryptographically sign every action (keystrokes, mouse movements, program actions) and record it on a blockchain, verifying that the content was created by a person rather than generated by a computer or AI. This might be one of the first legitimate uses for the blockchain. To be clear, I do not think these devices exist yet, unless one of the major chip manufacturers has them in R&D. I first encountered the idea of verification chips in Ned Beauman's novel "Venomous Lumpsucker", in which they are routinely used in a near-future society to show that photos and videos have not been faked.
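The chip itself is hypothetical, but the logging it would perform can be sketched. Below is a minimal sketch, assuming each input event is appended to a hash-chained log and tagged with a per-device secret. HMAC-SHA256 stands in for the chip's signature so the example needs only the standard library, and publishing the log to a blockchain is left out. The names `DEVICE_KEY`, `record_event`, `verify_log`, and `replay_text` are illustrative, not part of any existing product.

```python
import hashlib
import hmac
import json

# HYPOTHETICAL secret burned into the chip at manufacture. A real chip would more
# likely hold a private key for public-key signatures, so anyone could verify the
# log without knowing the secret.
DEVICE_KEY = b"hypothetical-per-device-secret"
GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _hash(prev: str, event: dict) -> str:
    payload = json.dumps({"prev": prev, "event": event}, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()


def _tag(record_hash: str) -> str:
    return hmac.new(DEVICE_KEY, record_hash.encode(), hashlib.sha256).hexdigest()


def record_event(log: list, event: dict) -> None:
    """Append one input event, chained to the previous record and tagged by the chip."""
    prev = log[-1]["hash"] if log else GENESIS
    h = _hash(prev, event)
    log.append({"prev": prev, "event": event, "hash": h, "tag": _tag(h)})


def verify_log(log: list) -> bool:
    """Recompute every link and tag. Editing, reordering, or removing an earlier
    record breaks the chain; dropping records from the end is not caught by the
    chain alone."""
    prev = GENESIS
    for rec in log:
        if rec["prev"] != prev or _hash(prev, rec["event"]) != rec["hash"]:
            return False
        if not hmac.compare_digest(_tag(rec["hash"]), rec["tag"]):
            return False
        prev = rec["hash"]
    return True


def replay_text(log: list) -> str:
    """Rebuild typed text from keystroke events to compare with the published content."""
    return "".join(r["event"]["key"] for r in log if r["event"]["type"] == "keystroke")


if __name__ == "__main__":
    log: list = []
    for ch in "Hi":
        record_event(log, {"type": "keystroke", "key": ch})
    print(verify_log(log), replay_text(log))  # True Hi

    log[0]["event"]["key"] = "X"  # tamper with the first keystroke
    print(verify_log(log))        # False
```

Chaining each record to the hash of the one before it is what turns individual signed keystrokes into a lineage: a forger cannot splice in generated text without the device secret.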

How do verification chips solve the issue of trust? They log the content creation process with cryptographic signatures, which can be used to verify that, yes, this photo you are seeing was taken by a specific device and not generated by AI, or that, yes, a human pressed keys on a keyboard to write this essay and it was not generated by ChatGPT. Why is this a better process than watermarks or digital signing? Because we don't trust anything unless it can prove it was created by a person. The watermark framework fails to establish trust because it treats content as authentic when there is no watermark, but watermarks can be left off or removed. The digital signing framework fails because we have no way of knowing whether the source content was generated with AI. The verification chip framework succeeds in establishing trust because a verification lineage is built from every keystroke, mouse movement, and program action and then cryptographically signed to show that a human created the content.

Figure 3. Zero Trust Framework

As you can see in Figure 3, the verification chip framework works by being embedded within the content creation process itself. Unlike the watermark and digital signing frameworks, this leaves AI-generated content no backdoor into verification. Thus, we create a process in which content is legitimate only if it can be proven to have been created by a human.
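The difference between the three frameworks comes down to the default each one applies when evidence is missing. The sketch below reduces each framework to a boolean rule over a few hypothetical facts about a piece of content; it models only the trust decision, not how those facts would be established, and the `Content` fields are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Content:
    # Simplified, HYPOTHETICAL facts a verifier might establish about a piece of content.
    has_watermark: bool          # a generator inserted a watermark and it survived
    signature_valid: bool        # the final content carries a valid signature
    creation_log_verified: bool  # a verified device log covers how it was created


def watermark_rule(c: Content) -> bool:
    """Default allow: no watermark is read as 'made by a human'."""
    return not c.has_watermark


def signing_rule(c: Content) -> bool:
    """Trusts whatever the signer chose to sign, whatever its origin."""
    return c.signature_valid


def zero_trust_rule(c: Content) -> bool:
    """Default deny: trust only content whose creation process is itself verified."""
    return c.signature_valid and c.creation_log_verified


if __name__ == "__main__":
    # AI text generated offline (no watermark), then signed and posted.
    offline_ai = Content(has_watermark=False, signature_valid=True, creation_log_verified=False)
    # An essay typed on a device with a verification chip.
    chip_essay = Content(has_watermark=False, signature_valid=True, creation_log_verified=True)

    for rule in (watermark_rule, signing_rule, zero_trust_rule):
        print(rule.__name__, rule(offline_ai), rule(chip_essay))
    # watermark_rule True True
    # signing_rule True True
    # zero_trust_rule False True
```

Only the zero-trust rule rejects the offline AI text, because it requires positive evidence about the creation process instead of accepting the absence of a warning sign.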

As we enter the next few years on the cusp of an explosion of AI, we need ways to trust the world around us. A zero-trust framework appears to be the best option. Verification chips that work as far upstream in the content creation process as possible appear to be a good option, but there may be others. Until we find a way to prove that content is legitimate, we will continue to question whether the content we consume was created by a person or artificially generated.

P.S. There are legitimate uses of AI-generated content in partnership with human creation; those were not the focus of this article.